Executive summary:

Polygon zkEVM is a Polygon project that aims to solve Ethereum's scalability issues by building a Zero Knowledge rollup with EVM equivalence. The project has been in development since mid-2022 and is set to go live on mainnet on March 27th.

The zkEVM builds on the Mir and Hermez protocols, both acquired by Polygon, to create a powerful Layer 2 technology that aims to bring blockchain technology to mass adoption. The project has also benefited significantly from the $1 billion that Polygon Labs set aside for zero-knowledge development. That funding has allowed Polygon to hire ZK experts and builders and to incentivize projects and protocols to deploy on the new Layer 2.

By achieving a high degree of EVM equivalence, Polygon zkEVM offers developers and users an experience similar to Ethereum mainnet, with the added benefits of higher throughput and lower transaction costs. This makes zkEVM stand out from its competitors as an efficient and user-friendly solution to Ethereum's scalability issues.

In this article, we will explore the technology behind Polygon zkEVM, its unique features, and how it sets itself apart from other Layer 2 solutions. Whether you're a blockchain enthusiast or simply curious about the latest developments in the industry, this article aims to provide valuable insights into the past, present and future of ZK technology.

Part 1 focuses on the history of scaling and provides the context needed to understand ZK technology.

Readers who are already familiar with this background can skip directly to Part 2 for the presentation of Polygon zkEVM.

I. Introduction to scaling, rollups and zkEVM

1. History of Scaling and Polygon Initiatives

Before we dive into the specifics of Polygon’s zkEVM, let’s have a look at how we got to where we are today and how Polygon’s scaling initiatives have evolved over time as new technologies have emerged.

Scaling the throughput of blockchain networks has been a main focus of research and development in the blockchain space for years. It is indisputable that to reach true mass adoption, blockchains need to be able to scale. But what exactly does that mean? Generally, scalability is the ability of a network to process a large number of transactions quickly and at low cost. This consequently means that as more use cases arise and network adoption accelerates, the performance of the blockchain doesn’t suffer. Based on this definition, Ethereum, for example, lacks scalability.

With increasing network usage, gas prices on Ethereum have in the past skyrocketed to unsustainably high levels, pricing out many smaller users from interacting with decentralized applications entirely. This gave alternative, more “scalable” L1 blockchains a chance to eat into Ethereum’s market share, but also spurred innovation around increasing the throughput of the Ethereum network.

But can’t Ethereum just use more powerful hardware? Can’t we simply raise the hardware requirements for validator nodes and rely on a smaller set of them, thereby improving the network’s ability to verify the chain and hold its state? Well, we could. And it’s actually the approach that many alternative layer 1 chains have chosen to take (e.g. Solana). But the question is, at what cost does this scalability increase come? To understand that, it’s important to be familiar with the blockchain trilemma (visualized in figure 1 below). The concept refers to the idea that a blockchain cannot achieve all three core qualities that any blockchain network should strive to have (scalability, security & decentralization) at the same time.

What that means becomes clear if we think about the aforementioned increase in hardware requirements. Consider an alt L1 chain that scales throughput by adopting a more centralized network structure, where users have to trust a smaller number of validators running high-spec machines. Such a chain sacrifices decentralization & security for scalability. Additionally, with the need for more powerful hardware, running a node also becomes more expensive (the hardware itself, but also bandwidth & storage). This drastically impairs the decentralization of the network. As the barriers to entry increase, fewer people can afford to participate in the network and validate transactions in the first place.

Since decentralization and inclusion are two core values of the Ethereum community and Ethereum is built on a culture of users verifying the chain, it is not very surprising that running the chain on a small set of high-spec nodes is not a suitable path for scaling Ethereum. Even Vitalik Buterin argues that it is “crucial for blockchain decentralization for regular users to be able to run a node”. Hence, other scaling approaches gained traction. The following subsections will explore these technologies in detail.

a. Side-Chains, Plasma & Polygon PoS

The idea behind side-chains is to operate an additional blockchain in conjunction with a primary blockchain (Ethereum). The two chains can communicate with each other, which facilitates the movement of assets between them. A side-chain operates as a distinct blockchain that functions independently from Ethereum and links to Ethereum mainnet through a two-way bridge. Side-chains generally have their own block parameters and consensus algorithms, which are frequently tailored for streamlined transaction processing and increased throughput. However, utilizing a side-chain also means making a trade-off, as it does not inherit Ethereum's security guarantees.

Polygon itself has built a network that might be considered a side-chain to Ethereum. The Polygon Proof of Stake network is an Ethereum Virtual Machine (EVM) compatible blockchain network that runs alongside Ethereum and features its own validator set as well as proprietary consensus algorithm optimized for a higher throughput than Ethereum’s layer 1. However, when it comes to the Polygon PoS network, it is worth differentiating it from a “pure” side-chain as it has a lot of extra features that rely on the security of the main Ethereum layer.

Most importantly, staking MATIC tokens happens on the Ethereum main chain, where the validator set is managed as well. If a validator engages in malicious behavior such as double signing, or experiences extensive downtime, their stake is subject to slashing. Because staking is carried out through smart contracts on Ethereum, the need to trust validators is reduced, as some of Ethereum's security guarantees are inherited in this pivotal process.

In the unlikely event of a collusion scenario where the majority of validators engage in malicious activities, the community can still collaborate to redeploy the contracts on Ethereum and implement a fork that eliminates the malicious validators, enabling the chain to continue operating as intended.

The network also uses a checkpointing technique to increase network security in which a single Merkle root is periodically published to the Ethereum layer 1. This published state is referred to as a checkpoint. Checkpoints are important as they provide finality on the Ethereum chain. The Polygon PoS Chain contract deployed on the Ethereum layer 1 is considered to be the ultimate source of truth, and therefore all validation is done via querying the Ethereum main chain contract. Taking this into consideration, Polygon PoS should probably be referred to as a commit chain rather than a classical side-chain.

Similarly, plasma chains also use their own consensus mechanism to generate blocks. However, unlike side-chains, they publish the "root" of each plasma chain block to Ethereum. This is very similar to checkpointing on Polygon PoS but demands more communication with L1. The "root" in this context is essentially a small piece of information that enables users to demonstrate certain aspects of the L2 block's contents.
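The Python sketch below makes the idea of such a "root" concrete by building a binary Merkle tree over a few blocks. Only the 32-byte root would need to be published to L1. A short inclusion proof then lets anyone show that a given block was committed under that root. The choice of SHA-256 and the rule of duplicating the last node on odd-sized levels are assumptions made for illustration. Polygon PoS checkpoints and individual plasma designs define their own tree layouts and hash functions.

```python
import hashlib
from typing import List, Tuple

def h(data: bytes) -> bytes:
    """SHA-256, used here as a stand-in for the chain's actual hash function."""
    return hashlib.sha256(data).digest()

def _next_level(level: List[bytes]) -> List[bytes]:
    """Hash adjacent pairs, duplicating the last node if the level has odd length."""
    if len(level) % 2 == 1:
        level = level + [level[-1]]
    return [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]

def merkle_root(leaves: List[bytes]) -> bytes:
    """Commit to a non-empty list of leaves with a single 32-byte root."""
    level = [h(leaf) for leaf in leaves]
    while len(level) > 1:
        level = _next_level(level)
    return level[0]

def merkle_proof(leaves: List[bytes], index: int) -> List[Tuple[bytes, bool]]:
    """Collect sibling hashes from leaf to root; the flag is True if the sibling sits on the right."""
    level = [h(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        padded = level + [level[-1]] if len(level) % 2 == 1 else level
        sibling = index ^ 1
        proof.append((padded[sibling], sibling > index))
        level = _next_level(level)
        index //= 2
    return proof

def verify(leaf: bytes, proof: List[Tuple[bytes, bool]], root: bytes) -> bool:
    """Recompute the path to the root using only the leaf and its proof."""
    node = h(leaf)
    for sibling, sibling_is_right in proof:
        node = h(node + sibling) if sibling_is_right else h(sibling + node)
    return node == root

# Usage: only `root` is published to L1; users keep their own proofs.
blocks = [b"block-1", b"block-2", b"block-3"]
root = merkle_root(blocks)
proof = merkle_proof(blocks, 2)
assert verify(b"block-3", proof, root)
assert not verify(b"block-4", proof, root)
```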

But while Polygon PoS gained significant traction as priced-out users left Ethereum in search of lower transaction fees, the overall adoption of side-chains and plasma chains as a scaling technology has remained limited.

b. State Channels

The same goes for a scaling approach referred to as state channels, which enables off-chain transactions between two or more parties. State channels allow participants to engage in a series of interactions, such as payments or game moves, without requiring each transaction to be recorded on the L1 blockchain.

The process begins with the creation of a smart contract on the Ethereum main chain. This contract includes the rules that govern the interactions and specifies the parties involved. Once the contract is established, the participants can open a state channel, which is an off-chain communication channel for executing transactions.

During the state channel's lifespan, the parties can engage in multiple interactions, updating the state of the channel through signed messages. These state changes are not immediately recorded on the blockchain. Instead, each update is signed by all parties, so any participant can later submit the latest signed state to the smart contract, which enforces it if necessary.

When the participants are finished with the interactions, they can close the state channel and publish the final state of the contract to the Ethereum main chain, which executes the contract and finalizes the transaction. This approach reduces the number of on-chain transactions needed to complete a series of interactions, allowing for faster and more efficient processing of transactions.
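The lifecycle described above can be sketched in a few lines of Python. The sketch models a simplified, hypothetical two-party payment channel. Every off-chain update carries a strictly increasing nonce and must be signed by both parties. The on-chain contract only accepts a fully signed state that is newer than the last one it has seen, which stops a participant from closing the channel with an outdated balance. HMAC with secret keys stands in for real public-key signatures (such as ECDSA) purely to keep the example dependency-free. Dispute timeouts are omitted.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import Dict, Tuple

def sign(key: bytes, message: bytes) -> bytes:
    # Stand-in for a real digital signature; a real contract would check public-key signatures.
    return hmac.new(key, message, hashlib.sha256).digest()

@dataclass(frozen=True)
class ChannelState:
    nonce: int                  # strictly increasing version number of the channel state
    balances: Tuple[int, int]   # (alice, bob)

    def encode(self) -> bytes:
        return f"{self.nonce}:{self.balances[0]}:{self.balances[1]}".encode()

class ChannelContract:
    """Hypothetical on-chain adjudicator for a two-party payment channel."""

    def __init__(self, keys: Dict[str, bytes], deposit: Tuple[int, int]):
        self.keys = keys
        self.latest = ChannelState(0, deposit)

    def submit(self, state: ChannelState, sigs: Dict[str, bytes]) -> bool:
        """Accept a state only if every party signed it, funds are conserved and it is newer."""
        message = state.encode()
        all_signed = all(
            hmac.compare_digest(sigs.get(party, b""), sign(key, message))
            for party, key in self.keys.items()
        )
        conserves_funds = sum(state.balances) == sum(self.latest.balances)
        if all_signed and conserves_funds and state.nonce > self.latest.nonce:
            self.latest = state
            return True
        return False

# Usage: two off-chain payments from Alice to Bob, each co-signed by both parties.
keys = {"alice": b"alice-secret", "bob": b"bob-secret"}
contract = ChannelContract(keys, deposit=(10, 10))
s1, s2 = ChannelState(1, (7, 13)), ChannelState(2, (4, 16))
sigs1 = {p: sign(k, s1.encode()) for p, k in keys.items()}
sigs2 = {p: sign(k, s2.encode()) for p, k in keys.items()}

assert contract.submit(s2, sigs2)      # Bob closes with the latest state
assert not contract.submit(s1, sigs1)  # Alice cannot roll back to the older state
print(contract.latest.balances)        # (4, 16)
```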

While state channels initially seemed like a promising solution for scaling blockchain networks like Ethereum, state channels require a certain level of trust between the participants and there is a considerable risk of disputes arising if one party fails to follow the rules outlined in the smart contract. Therefore, state channels are primarily well suited for interactions between parties who trust each other, which limits the number of use cases the technology can feasibly support. Consequently, adoption has remained low as other scaling technologies took the spotlight.

c. Homogeneous Execution Sharding

A scaling approach that many alternative L1 blockchains have chosen to take, and that for quite some time seemed like the most promising solution to Ethereum’s scalability issues as well, is what is referred to as homogeneous execution sharding.

Homogeneous execution sharding is a blockchain scaling approach that seeks to increase the throughput and capacity of a blockchain network by splitting its transaction processing workload among multiple, smaller units (validator sub-sets) called shards. Each shard operates independently and concurrently, processing its own set of transactions and maintaining a separate state. The goal is to enable parallel execution of transactions, thus increasing the overall network capacity and speed. Harmony and Ethereum 2.0 (old roadmap only!) are two examples of scaling initiatives that have adopted or at least considered homogeneous execution sharding as part of their scaling strategy.

Harmony is an alternative L1 blockchain platform that aims to provide a scalable, secure, and energy-efficient infrastructure for decentralized applications (dApps). It uses a sharding-based approach in which the network is divided into multiple shards, each with its own set of validators who are responsible for processing transactions and maintaining a local state. Validators are randomly assigned to shards, ensuring a fair and balanced distribution of resources. The network uses a consensus algorithm called Fast Byzantine Fault Tolerance (FBFT), a variant of the Practical Byzantine Fault Tolerance (PBFT) consensus mechanism, to achieve fast and secure transaction validation across shards.
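The two ingredients just described are splitting state and transactions across shards and randomly assigning validators to them. The Python sketch below shows one way they might look. It is not Harmony's or Ethereum's actual algorithm. The shard count, the hash-based account-to-shard mapping and the round-robin dealing of a shuffled validator list are illustrative assumptions. A real protocol would also derive the shuffle seed from unbiasable on-chain randomness rather than from a fixed integer.

```python
import hashlib
import random
from typing import Dict, List

NUM_SHARDS = 4  # hypothetical; real networks choose their own shard count

def shard_of(account: str, num_shards: int = NUM_SHARDS) -> int:
    """Deterministically map an account to the shard that stores its state."""
    digest = hashlib.sha256(account.encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_shards

def assign_validators(validators: List[str], seed: int,
                      num_shards: int = NUM_SHARDS) -> Dict[int, List[str]]:
    """Shuffle validators with a shared seed, then deal them round-robin into shard committees."""
    shuffled = list(validators)
    random.Random(seed).shuffle(shuffled)
    committees: Dict[int, List[str]] = {s: [] for s in range(num_shards)}
    for i, validator in enumerate(shuffled):
        committees[i % num_shards].append(validator)
    return committees

def split_workload(txs: List[dict], num_shards: int = NUM_SHARDS) -> Dict[int, List[dict]]:
    """Route each transaction to the shard holding its sender's account."""
    buckets: Dict[int, List[dict]] = {s: [] for s in range(num_shards)}
    for tx in txs:
        buckets[shard_of(tx["from"], num_shards)].append(tx)
    return buckets

# Usage: 16 validators become 4 committees of 4; 100 transactions are spread across shards.
committees = assign_validators([f"val-{i}" for i in range(16)], seed=42)
buckets = split_workload([{"from": f"acct-{i}", "to": "acct-0", "value": 1} for i in range(100)])
print({shard: len(members) for shard, members in committees.items()})  # {0: 4, 1: 4, 2: 4, 3: 4}
```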

Cross-shard communication is facilitated through a mechanism called "receipts," which allows shards to send information about the state changes resulting from a transaction to other shards. This enables seamless interactions between dApps and smart contracts residing on different shards, without compromising the security and integrity of the network.
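A two-step cross-shard transfer based on receipts could be sketched as follows. This is a hypothetical simplification, not Harmony's implementation. In practice the destination shard would also verify a proof that the receipt was really emitted, for example a Merkle proof against the source shard's block header. That check is omitted here. The sketch only shows the debit-then-credit flow and the replay protection that makes each receipt usable once.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

@dataclass(frozen=True)
class Receipt:
    receipt_id: int
    to_shard: int
    recipient: str
    amount: int

@dataclass
class Shard:
    shard_id: int
    balances: Dict[str, int] = field(default_factory=dict)
    outbox: List[Receipt] = field(default_factory=list)
    consumed: Set[int] = field(default_factory=set)

    def send_cross_shard(self, sender: str, recipient: str, to_shard: int,
                         amount: int, receipt_id: int) -> Receipt:
        """Source shard: debit the sender and emit a receipt describing the state change."""
        if self.balances.get(sender, 0) < amount:
            raise ValueError("insufficient balance")
        self.balances[sender] -= amount
        receipt = Receipt(receipt_id, to_shard, recipient, amount)
        self.outbox.append(receipt)
        return receipt

    def apply_receipt(self, receipt: Receipt) -> bool:
        """Destination shard: credit the recipient, processing each receipt at most once."""
        if receipt.to_shard != self.shard_id or receipt.receipt_id in self.consumed:
            return False
        self.consumed.add(receipt.receipt_id)
        self.balances[receipt.recipient] = self.balances.get(receipt.recipient, 0) + receipt.amount
        return True

# Usage: Alice on shard 0 pays Bob on shard 1.
shard0, shard1 = Shard(0, {"alice": 50}), Shard(1)
receipt = shard0.send_cross_shard("alice", "bob", to_shard=1, amount=20, receipt_id=1)
assert shard1.apply_receipt(receipt)
assert not shard1.apply_receipt(receipt)  # replaying the same receipt has no effect
print(shard0.balances, shard1.balances)   # {'alice': 30} {'bob': 20}
```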

Ethereum 2.0 is a planned upgrade to the Ethereum network aiming to address the scalability, security, and sustainability issues faced by the original Proof-of-Work (PoW) based version of Ethereum. The old Ethereum 2.0 roadmap proposed a multi-phase rollout, transitioning the network to a Proof-of-Stake (PoS) consensus mechanism (which finally happened with the Merge in September 2022) and introducing execution sharding to improve scalability (in the old roadmap!). Under this original plan, Ethereum 2.0 would have consisted of a Beacon Chain and 64 shard chains. The Beacon Chain was designed to manage the PoS protocol, validator registration, and cross-shard communication.

The shard chains, on the other hand, were individual chains responsible for processing transactions and maintaining separate states in parallel. Validators would have been assigned to a shard and would rotate periodically to maintain the security and decentralization of the network. The Beacon Chain would have kept track of validator assignments and managed the process of finalizing shard chain data. Cross-shard communication was planned to be facilitated through a mechanism called "crosslinks," which would periodically bundle shard chain data into the Beacon Chain, allowing state changes to be propagated across the network.

However, the Ethereum 2.0 roadmap has since evolved, and execution sharding has been replaced by an approach referred to as data sharding that aims to provide the scalable basis for a more complex scaling technology known as rollups (more on this soon!).

d. Heterogeneous Execution Sharding

Heterogeneous execution sharding is a blockchain scaling approach that connects multiple, independent blockchains with different consensus mechanisms, state models, and functionality into a single, interoperable network. This approach allows each connected blockchain to maintain its unique characteristics while benefiting from the security and scalability of the entire ecosystem. Two prominent examples of projects that employ heterogeneous execution sharding are Polkadot and Cosmos.

Polkadot is a decentralized platform designed to enable cross-chain communication and interoperability among multiple blockchains. Its architecture consists of a central Relay Chain, multiple Parachains, and Bridges.

Relay Chain: The main chain in the Polkadot ecosystem, responsible for providing security, consensus, and cross-chain communication. Validators on the Relay Chain are in charge of validating transactions and producing new blocks.

Parachains: Independent blockchains that connect to the Relay Chain to benefit from its security and consensus mechanisms, as well as enable interoperability with other chains in the network. Each parachain can have its own state model, consensus mechanism, and specialized functionality tailored to specific use cases.

Bridges: Components that link Polkadot to external blockchains (like Ethereum) and enable communication and asset transfers between these networks and the Polkadot ecosystem.

Polkadot secures its network with a mechanism called Nominated Proof-of-Stake (NPoS). The community nominates validators on the Relay Chain, and these validators in turn validate transactions and produce blocks. Parachains can use different consensus mechanisms, depending on their requirements. An important feature of Polkadot’s network architecture is that, by design, all parachains share security with the Relay Chain and hence inherit its security guarantees.

Cosmos is another decentralized platform that aims to create an "Internet of Blockchains," facilitating seamless communication and interoperability between different blockchain networks. Its architecture is similar to Polkadot’s and is composed of a central Hub, multiple Zones, and Bridges.

Hub: The central blockchain in the Cosmos ecosystem, enabling cross-chain communication and soon inter-chain security (shared security similar to Polkadot's). The Cosmos Hub runs on Tendermint, a Byzantine Fault Tolerant consensus engine combined with Proof-of-Stake (PoS), which offers fast finality and high throughput. Theoretically, there can be multiple hubs. But especially with ATOM 2.0 and inter-chain security coming up, the Cosmos Hub will likely remain the center of the Cosmos-enabled internet of blockchains.

Zones: Independent blockchains connected to the Hub, each with its own consensus mechanism, state model, functionality and generally also validator set. Zones can communicate with each other through the Hub using a standardized protocol called Inter-Blockchain Communication (IBC).

Bridges: Components that link the Cosmos ecosystem to external blockchains, allowing asset transfers and communication between Cosmos Zones and other networks.

Both Polkadot and Cosmos are examples of heterogeneous execution sharding, as they connect multiple, independent blockchains with diverse functionality, consensus mechanisms, and state models into a single, interoperable ecosystem. This approach allows each connected chain to maintain its unique characteristics while enabling scalability by separating application-specific execution layers from each other that still benefit from the cross-chain communication and security capabilities of the entire network.

e. Scaling Ethereum on Rollups

Rollups take sharding within a shared security paradigm to the next level. It’s a scaling solution in which transactions are processed off-chain in the execution environment of the rollup and, as the name suggests, rolled up into batches. Sequencers collect transaction data on the L2 and submit the data to a smart contract on Ethereum L1 that enforces correct transaction execution on L2 and stores the transaction data on L1, thereby enabling rollups to inherit the security of the battle-tested Ethereum base layer.
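The sequencer's job described above can be sketched in Python in four steps: execute a list of transfers off-chain, keep only the valid ones, compress the batch data that would go to L1, and compute a commitment to the resulting state. Everything here is a simplification under stated assumptions. Real rollups commit to state with Merkle-Patricia or sparse Merkle trees and use their own transaction encodings and compression. This sketch instead uses JSON, zlib and a flat SHA-256 hash.

```python
import hashlib
import json
import zlib
from typing import Dict, List, Tuple

def state_root(balances: Dict[str, int]) -> str:
    """Toy state commitment: SHA-256 of the canonically serialized balances."""
    return hashlib.sha256(json.dumps(balances, sort_keys=True).encode()).hexdigest()

def build_batch(balances: Dict[str, int], txs: List[dict]) -> Tuple[Dict[str, int], bytes, str]:
    """Execute transactions off-chain and return (new_state, compressed_batch_data, new_root)."""
    state = dict(balances)
    included = []
    for tx in txs:
        if state.get(tx["from"], 0) < tx["value"]:
            continue  # drop transactions that would overdraw the sender
        state[tx["from"]] -= tx["value"]
        state[tx["to"]] = state.get(tx["to"], 0) + tx["value"]
        included.append(tx)
    batch_data = zlib.compress(json.dumps(included, sort_keys=True).encode())
    return state, batch_data, state_root(state)

# Usage: the sequencer would post `batch_data` and `new_root` to the rollup contract on L1.
genesis = {"alice": 100, "bob": 0}
txs = [
    {"from": "alice", "to": "bob", "value": 30},
    {"from": "bob", "to": "carol", "value": 50},  # overdraws, so it is dropped
]
new_state, batch_data, new_root = build_batch(genesis, txs)
print(new_state)  # {'alice': 70, 'bob': 30}
```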

So now what were essentially shards in the old Ethereum 2.0 roadmap are completely decoupled from the network and devs have a wide open space to develop their L2 “chain” however they want (similar to Polkadot’s parachains or Cosmos’ zones), while still being able to rely on Ethereum L1’s security by communicating through arbitrary smart contracts developed in a way that is optimized for the specific rollup. Another key advantage, for example compared to side-chains, is that a rollup does not need a validator set and consensus mechanism of its own.

A rollup system only needs to have a set of sequencers (performing the tasks outlined above), with only one sequencer needing to be live at any given time. With weak assumptions like this, rollups can actually run on a small set of high-spec server-grade machines, allowing for great scalability. However, most rollups try to design their systems as decentralized as possible (more on that later). Also, instead of consensus mechanisms, rollups can for example have coordination mechanisms with rotation schedules to rotate sequencers accordingly, thereby increasing security & reducing the time the (high-spec) sequencer nodes need to be online.
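A coordination mechanism of this kind can be as simple as a deterministic rotation schedule. The Python sketch below implements a hypothetical round-robin scheme that falls back to the next live sequencer. The turn length and the fallback rule are assumptions made for illustration, not the design of any particular rollup.

```python
from typing import List, Set

def scheduled_sequencer(sequencers: List[str], slot: int, slots_per_turn: int = 10) -> str:
    """Round-robin schedule: each sequencer leads for `slots_per_turn` consecutive slots."""
    if not sequencers:
        raise ValueError("need at least one sequencer")
    return sequencers[(slot // slots_per_turn) % len(sequencers)]

def live_sequencer(sequencers: List[str], live: Set[str], slot: int,
                   slots_per_turn: int = 10) -> str:
    """Fall back to the next sequencer in the schedule if the scheduled one is offline."""
    if not sequencers:
        raise ValueError("need at least one sequencer")
    start = (slot // slots_per_turn) % len(sequencers)
    for offset in range(len(sequencers)):
        candidate = sequencers[(start + offset) % len(sequencers)]
        if candidate in live:
            return candidate
    raise RuntimeError("no sequencer is live")

# Usage: only one sequencer needs to be online for the rollup to keep making progress.
seqs = ["seq-a", "seq-b", "seq-c"]
print(scheduled_sequencer(seqs, slot=25))             # seq-c
print(live_sequencer(seqs, live={"seq-a"}, slot=12))  # seq-a
```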

Generally, there are two types of rollup systems:

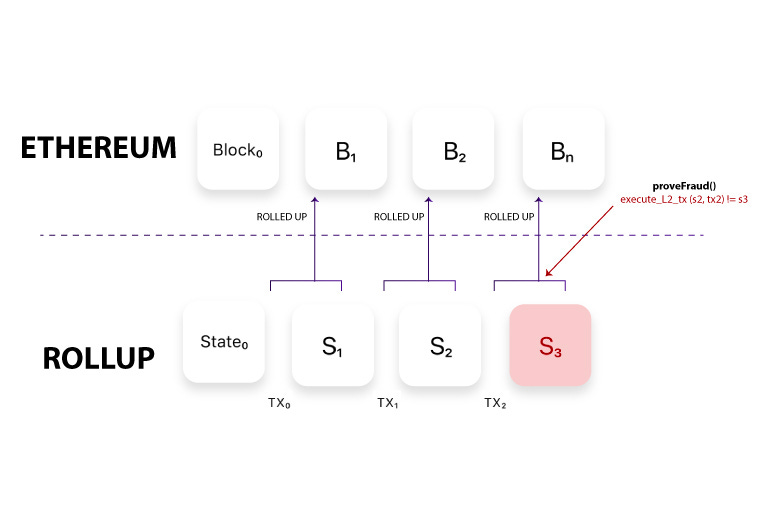

What is referred to as optimistic rollups are characterized by having a sequencer node that collects transaction data on L2, subsequently submitting this data to the Ethereum base layer alongside the new L2 state root. In order to ensure that the new state root submitted to Ethereum L1 is correct, verifier nodes will compare their new state root to the one submitted by the sequencer. If there is a difference, they will begin what’s called a fraud proof process. If the fraud proof’s state root is different from the one submitted by the sequencer, the sequencer’s initial deposit (a.k.a. bond) will be slashed. The state roots from that transaction onward will be erased and the sequencer will have to recompute the lost state roots.
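The fraud-proof logic can be sketched in Python as a hypothetical L1 contract. The contract stores each batch and its claimed state root together with the sequencer's bond. To keep the sketch short, the contract simply re-executes everything up to the challenged batch. Production optimistic rollups instead run an interactive bisection game, so that L1 only has to re-execute a single disputed step, and they enforce a time-limited challenge window. The state transition and state root are toy assumptions: there are no balance checks, and the root is SHA-256 over JSON.

```python
import hashlib
import json
from typing import Dict, List

def apply_batch(state: Dict[str, int], txs: List[dict]) -> Dict[str, int]:
    """Deterministic state transition shared by sequencer, verifiers and contract (no balance checks)."""
    new_state = dict(state)
    for tx in txs:
        new_state[tx["from"]] = new_state.get(tx["from"], 0) - tx["value"]
        new_state[tx["to"]] = new_state.get(tx["to"], 0) + tx["value"]
    return new_state

def state_root(state: Dict[str, int]) -> str:
    return hashlib.sha256(json.dumps(state, sort_keys=True).encode()).hexdigest()

class OptimisticRollup:
    """Hypothetical L1 contract: stores batch data, claimed roots and sequencer bonds."""

    def __init__(self, genesis: Dict[str, int]):
        self.genesis = genesis
        self.batches: List[List[dict]] = []
        self.claimed_roots: List[str] = []
        self.bonds: List[int] = []

    def propose(self, txs: List[dict], claimed_root: str, bond: int) -> None:
        """Sequencer posts a batch and its claimed post-state root without proving it."""
        self.batches.append(txs)
        self.claimed_roots.append(claimed_root)
        self.bonds.append(bond)

    def challenge(self, index: int) -> int:
        """Re-execute up to batch `index`; on mismatch, slash the bond and erase roots from `index` on."""
        state = self.genesis
        for txs in self.batches[: index + 1]:
            state = apply_batch(state, txs)
        if state_root(state) == self.claimed_roots[index]:
            return 0  # the claim was correct, so the challenge fails
        slashed = self.bonds[index]
        del self.batches[index:]
        del self.claimed_roots[index:]
        del self.bonds[index:]
        return slashed

# Usage: the second batch claims a wrong root and is rolled back.
genesis = {"alice": 100, "bob": 0}
rollup = OptimisticRollup(genesis)
batch = [{"from": "alice", "to": "bob", "value": 10}]
rollup.propose(batch, state_root(apply_batch(genesis, batch)), bond=5)
rollup.propose(batch, state_root({"alice": 0, "bob": 100}), bond=5)  # fraudulent root
assert rollup.challenge(0) == 0
assert rollup.challenge(1) == 5
assert len(rollup.claimed_roots) == 1
```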

Zero Knowledge rollups or zk-rollups on the other hand rely on validity proofs in the form of Zero Knowledge proofs (e.g. SNARKs or STARKs) instead of fraud proving mechanisms. So basically, similar to the optimistic rollup systems, a sequencer collects transaction data on L2 and is responsible for submitting (and sometimes also generating) the Zero Knowledge proof to L1. The sequencer’s stake can be slashed if they act maliciously, which incentivizes them to post valid blocks (or proofs of batches). The prover (or sequencer if combined in one) generates unforgeable proofs of the execution of transactions, proving that these new states and executions are correct.

The sequencer subsequently submits these proofs to the verifier contract on the Ethereum mainnet. Technically, the responsibilities of sequencers and provers can be combined into one role. However, because proof generation and transaction ordering each require highly specialized skills to perform well, splitting these responsibilities prevents unnecessary centralization in a rollup’s design. The Zero Knowledge proof the sequencer submits to L1 reports only the changes in L2 state and provides this data to the verifier smart contract on Ethereum mainnet in the form of a verifiable hash.

Determining which approach is superior is a challenging task. However, let's briefly explore some key differences. Firstly, because validity proofs can be verified cryptographically, the Ethereum network can trustlessly verify the legitimacy of batched transactions. This differs from optimistic rollups, where Ethereum relies on verifier nodes to check transactions and submit fraud proofs if necessary. Hence, some may argue that zk-rollups are more secure. Furthermore, validity proofs (the zero-knowledge proofs) allow rollup transactions to be confirmed on the main chain as soon as the proof has been verified.

Consequently, users can transfer funds seamlessly between the rollup and the base blockchain (as well as other zk-rollups) without experiencing friction or delays. In contrast, optimistic rollups (such as Optimism and Arbitrum) impose a waiting period before users can withdraw funds to L1 (7 days in the case of Optimism & Arbitrum), as the verifiers need to be able to verify the transactions and initiate the fraud proving mechanism if necessary. This limits the efficiency of rollups and reduces the value for users. While there are ways to enable fast withdrawals, it is generally not a native feature.
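The practical difference in withdrawal times can be expressed as a small calculation. The Python sketch below assumes the 7-day challenge window mentioned above for optimistic rollups. For the zk-rollup it uses a purely hypothetical one-hour time for proof generation and verification. Real proving times depend on the prover setup and batch size, and fast-withdrawal services are not modeled.

```python
from datetime import datetime, timedelta, timezone

CHALLENGE_PERIOD = timedelta(days=7)  # figure quoted above for Optimism and Arbitrum

def earliest_withdrawal(rollup_type: str, batch_posted_at: datetime,
                        proving_time: timedelta = timedelta(0)) -> datetime:
    """Earliest time an L2 -> L1 withdrawal in a given batch can be finalized on L1."""
    if rollup_type == "optimistic":
        # Must wait out the full challenge window in case someone submits a fraud proof.
        return batch_posted_at + CHALLENGE_PERIOD
    if rollup_type == "zk":
        # Final as soon as the L1 verifier contract accepts the validity proof.
        return batch_posted_at + proving_time
    raise ValueError(f"unknown rollup type: {rollup_type}")

posted = datetime(2023, 3, 27, 12, 0, tzinfo=timezone.utc)
proving = timedelta(hours=1)  # hypothetical proof generation + verification time
print(earliest_withdrawal("optimistic", posted) - posted)  # 7 days, 0:00:00
print(earliest_withdrawal("zk", posted, proving) - posted)  # 1:00:00
```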

Rollups play an especially important role in Ethereum 2.0’s rollup-centric roadmap. Along with Proof of Stake consensus, the central feature of the ETH 2.0 design is what is referred to as data sharding. As per Ethereum’s rollup-centric roadmap, instead of actually processing transactions, the shards would simply store data & attest to the availability of ~250 kB blobs of data (see figure above). However, data sharding will still take some time until it’s fully implemented and deployed.

That’s where EIP-4844 comes into play. EIP-4844 (a.k.a. Proto-danksharding) provides an interim solution by already implementing the transaction format that will be used in data sharding, without actually sharding transactions yet. Instead, data from this transaction format (data blobs) is part of the beacon chain (L1) & is fully downloaded by all consensus nodes. However, to prevent state bloat, the blobs are not stored permanently and can be deleted after a short delay.

The new transaction type which is referred to as a blob-carrying transaction, is generally just like a regular transaction, except it also carries these extra pieces of data referred to as blobs. Blobs are rather large (~125 kB) & are much cheaper than similar amounts of call data that rollups would normally have to pay for when posting state roots or validity proofs to L1. Because validators & clients still have to download full blob contents, data bandwidth in proto-danksharding is targeted to 1 MB per slot instead of the full 16 MB that are targeted when data sharding is fully implemented. However, there are still large scalability gains because this data is not competing with the gas usage of other Ethereum L1 transactions.

Hence, L1 execution can be congested & expensive, while blobs remain very cheap (at least in the medium term). Finally, another benefit of the EIP-4844 rollout is that while rollups have to adapt to switch to EIP-4844, they won't have to adapt again when full data sharding is finally implemented. It’s the same transaction format, but will safely allow for additional data.
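The blob figures can be checked with some quick arithmetic. In the EIP-4844 specification, a blob consists of 4,096 field elements of 32 bytes each, or 131,072 bytes (128 KiB, the "~125 kB" above). The Python sketch below derives how many blobs fit into the ~1 MB and ~16 MB per-slot targets, taking 1 MB as 2^20 bytes for simplicity. It also computes how much gas the same amount of data would cost as calldata at the EIP-2028 rate of 16 gas per non-zero byte. Blob fees themselves are set by a separate fee market and are not modeled.

```python
FIELD_ELEMENTS_PER_BLOB = 4096      # EIP-4844
BYTES_PER_FIELD_ELEMENT = 32        # EIP-4844
CALLDATA_GAS_PER_NONZERO_BYTE = 16  # EIP-2028
MIB = 2**20

BLOB_SIZE = FIELD_ELEMENTS_PER_BLOB * BYTES_PER_FIELD_ELEMENT

def blobs_per_slot(target_bytes: int) -> float:
    """How many blobs fit into a per-slot data target."""
    return target_bytes / BLOB_SIZE

def calldata_gas_upper_bound(num_bytes: int) -> int:
    """Calldata gas for the same payload, assuming every byte is non-zero (worst case)."""
    return num_bytes * CALLDATA_GAS_PER_NONZERO_BYTE

print(BLOB_SIZE)                            # 131072 bytes per blob
print(blobs_per_slot(1 * MIB))              # 8.0 blobs at the proto-danksharding target
print(blobs_per_slot(16 * MIB))             # 128.0 blobs at the full data sharding target
print(calldata_gas_upper_bound(BLOB_SIZE))  # 2097152 gas if one blob's worth were calldata
```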

Through data sharding in the long term and EIP-4844 in the short term, Ethereum aims to address the data availability problem, which refers to the question of how peers in a blockchain network can be sure that all the data of a newly proposed block is actually available. If data is not available, a block might contain malicious transactions which are being hidden by the block producer. Even if the block contains non-malicious transactions, hiding transactions might compromise the security of the system.

This data availability problem is especially prominent in the context of rollup systems. It is very important that sequencers can make transaction data available, as the rollup needs to know about its state and users’ account balances. Data availability also introduces certain limitations to rollups on Ethereum. Even if the sequencer were an actual supercomputer, the number of transactions per second it can actually compute will be limited by the data throughput of the underlying data availability solution/layer it uses. If the data availability solution/layer used by a rollup is unable to keep up with the amount of data the rollup’s sequencer wants to dump on it, the sequencer (and the rollup) can’t process more transactions even if it wanted to.
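This bound is easy to quantify. The sequencer's compute never enters the formula. Only two inputs matter: the data bandwidth of the DA layer and the average number of bytes each transaction occupies once compressed. The Python sketch below uses Ethereum's 12-second slot time and the ~1 MB and ~16 MB per-slot targets mentioned above, again taken as 2^20 bytes per MB. It also assumes a purely hypothetical 100 bytes per compressed rollup transaction. Real per-transaction sizes vary widely between rollups and transaction types.

```python
SLOT_SECONDS = 12  # Ethereum slot time
MIB = 2**20

def max_rollup_tps(da_bytes_per_slot: int, bytes_per_tx: float,
                   slot_seconds: float = SLOT_SECONDS) -> float:
    """Upper bound on throughput imposed purely by data availability bandwidth."""
    return da_bytes_per_slot / slot_seconds / bytes_per_tx

BYTES_PER_TX = 100  # hypothetical size of one compressed rollup transaction

print(round(max_rollup_tps(1 * MIB, BYTES_PER_TX)))   # 874
print(round(max_rollup_tps(16 * MIB, BYTES_PER_TX)))  # 13981
```

Note that this capacity would be shared by every rollup posting data to the same DA layer, not available to a single rollup alone.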

By transforming the Ethereum base layer into a major data availability / settlement layer for a potentially very large number of highly scalable, rollup-based execution layers, the overall Ethereum network and its rollup ecosystems aim to enable enormous scale.

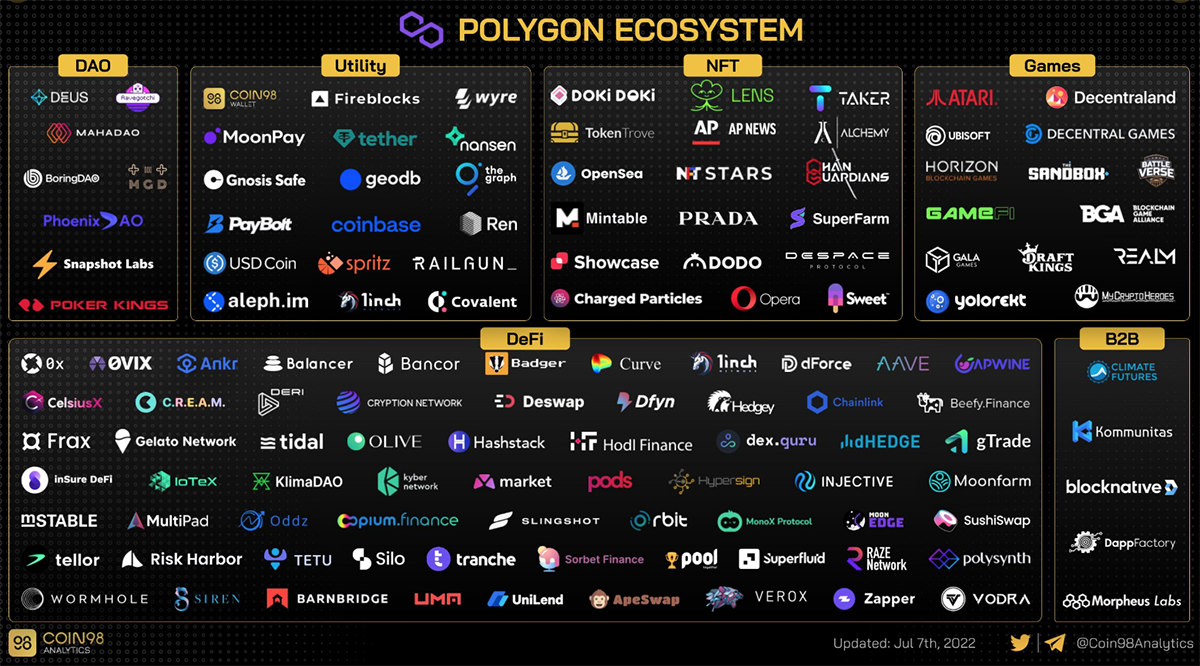

f. Polygon zk-Rollup Scaling Initiatives

Polygon has in many ways been a pioneer and a driving force behind the development of Zero Knowledge rollup technology. Since the launch of its PoS network, Polygon has pivoted to becoming an application platform providing all the tools and infrastructure needed to build various kinds of EVM-compatible layer 2 solutions. The vision includes providing developers an SDK to build various layer 2 scaling solutions, including rollup implementations such as zk-rollups, but also side or commit chain solutions like Polygon PoS and state channels. Polygon will also supply PoS validators from its existing network to support L2 infrastructure and build bridges between the various layer 2s, to become an aggregator of Ethereum L2 scaling solutions.

Probably most importantly, Polygon is strongly committed to scaling Ethereum on zk-rollups and plans to continue investing in the development of zero-knowledge technology. Over the years, Polygon has launched a variety of initiatives working towards scalable and secure zk-rollup implementations that allow for generalized computation and EVM equivalence. These initiatives include Polygon Zero, Hermez, Miden, Nightfall and what is now known as the Polygon zkEVM. Let’s have a brief look at what these initiatives aim to achieve and how some of them form the basis of the Polygon zkEVM that stands at the center of this report.

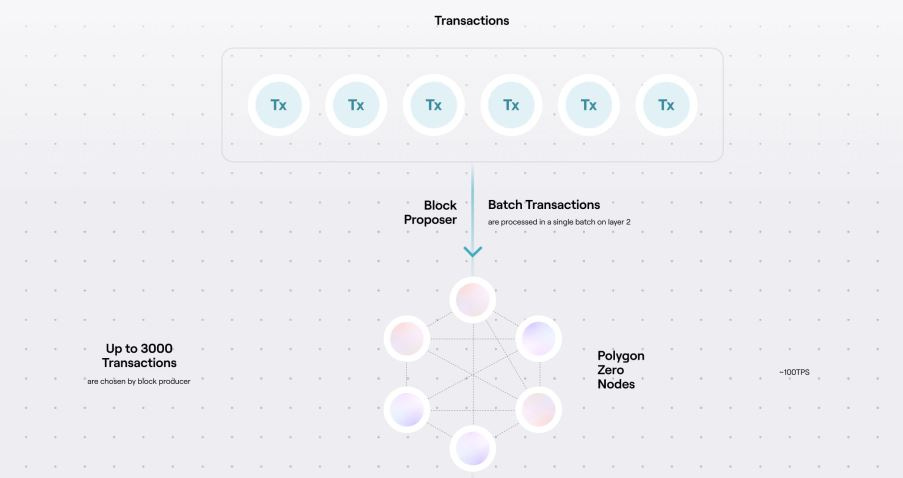

Polygon Zero is a zk-rollup solution designed to reduce the computational cost of generating validity proofs by utilizing the recursive Plonky2 proof system, a proving mechanism originally developed by the team behind Mir Protocol, which Polygon acquired for $400m. Plonky2 is said to be the cheapest and most performant proof system for proof generation / verification on Ethereum. Polygon Zero aims to be compatible with the Ethereum Virtual Machine (EVM) and can theoretically batch up to 3,000 transactions per block.

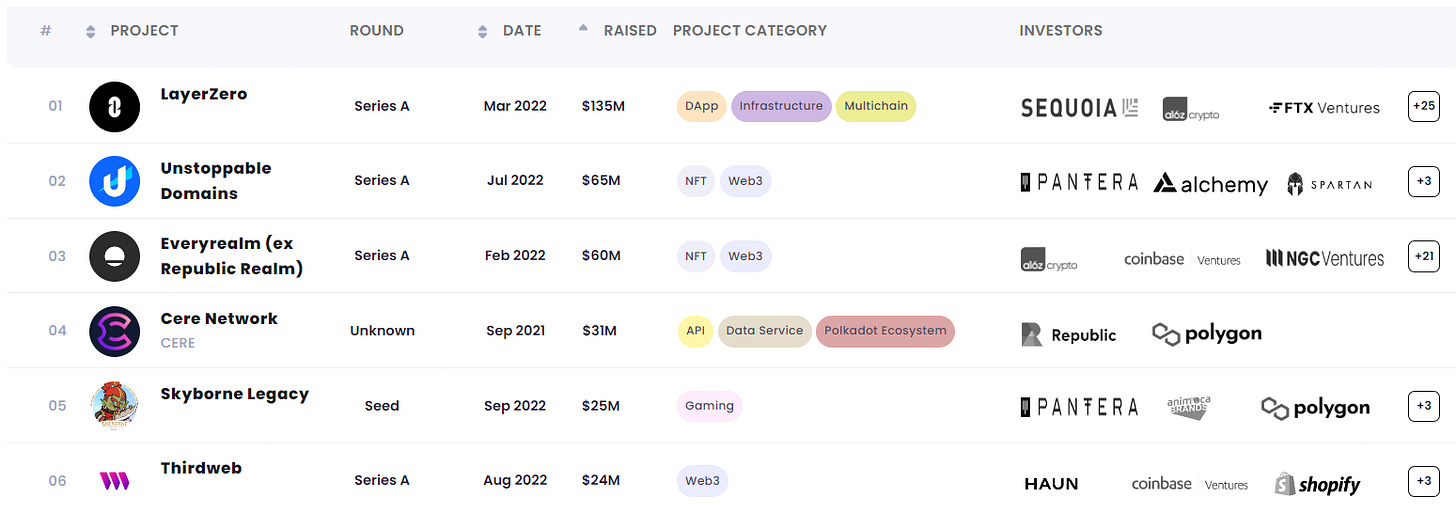

Polygon Hermez is a decentralized zk-rollup project built on the Ethereum network, with decentralization as its primary focus. It was originally acquired by Polygon for $250m and uses a novel L2 consensus algorithm called Proof of Efficiency (PoE) consisting of Sequencers and Aggregators, who work together to ensure rollup functionality. Polygon Hermez achieves significant throughput by batching up to 2,000 transactions and using SNARK proofs to validate transactions.

Polygon Miden is a general-purpose, STARK-based zk-rollup with EVM compatibility that relies on the Miden Virtual Machine (VM) to execute arbitrary logic and run smart contracts. Developers can compile Solidity or Vyper code into Miden Assembly, with the rollup being capable of processing up to 5,000 transactions per block and achieving over 1,000 TPS at launch.

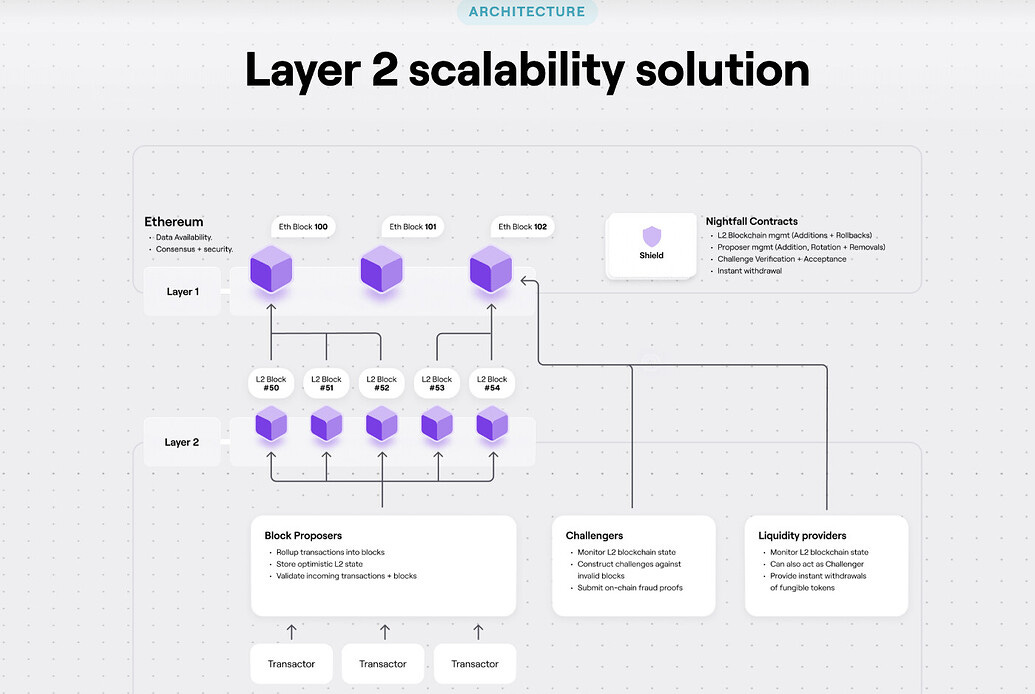

Polygon Nightfall, an enterprise rollup solution, combines optimistic rollups with zero-knowledge cryptography to offer scalable and private blockchain transactions for large-scale companies. Developed in collaboration with Ernst & Young (EY), the rollup architecture consists of Nightfall Contracts, Block Proposers, Challengers, and Liquidity Providers. Polygon Nightfall supports the secure and private exchange of various token standards and aims to deliver a 100 TPS rate for enterprise clients on Ethereum.

Hermez 2.0, more commonly referred to as Polygon zkEVM, is an upgrade to Polygon Hermez. It aims to introduce EVM equivalence (more on this in “Levels of EVM Equivalence”) to the existing zk-rollup tech, thereby enabling developers to port Ethereum-based dApps or launch EVM dApps directly on the rollup. Polygon zkEVM will also leverage Polygon Zero’s cutting-edge Zero Knowledge proof system plonky2 and Polygon Miden’s STARK proofs (more on this in “ZK Proof System (plonky2)”). This shows how the zkEVM is the result of research & engineering work across the teams and areas of all the aforementioned Polygon initiatives.

2. Deep-Dive into zk-Rollups

Now that we have established a basic understanding of zk-rollup technology, let's dive a bit deeper, explore the benefits that are inherent to zk-rollups and investigate some of the limitations and issues that exist for this nascent technology.

a. What makes zk-Rollups so exciting?

i. Scalability

The most obvious reason to be excited about zk-rollups is the enormous scale they provide. By providing an execution environment that is separated from the congested L1 execution layer and not bound by its limitations in terms of block size and transaction throughput, zk-rollups theoretically enable thousands of transactions per second.

But how does it look on the cost side of things? As we know, many users are priced out of the Ethereum L1 economy in times of high network activity as gas prices spike. Let’s have a closer look at rollup fees. As mentioned earlier, rollups need to post data on L1, hence, operating a rollup incurs a cost on Ethereum mainnet as well. Right now the cheapest option for rollups to post data on the Ethereum base layer is calldata (16 gas per non-zero byte and 4 gas per zero byte). However, this can still become rather expensive since the fee market for calldata is not separated from the fee market for general execution. Consequently, when gas prices are driven up by L1 activity, posting calldata gets more expensive.
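Under EIP-2028, the calldata cost of a batch depends on its byte composition: 4 gas per zero byte and 16 gas per non-zero byte. The Python sketch below computes that cost for a batch and amortizes it per transaction. The batch contents and gas prices are hypothetical. The 21,000-gas base cost of the L1 transaction and any proof verification gas are deliberately left out.

```python
def calldata_gas(data: bytes) -> int:
    """Calldata gas per EIP-2028: 4 gas per zero byte, 16 gas per non-zero byte."""
    zero_bytes = data.count(0)
    return 4 * zero_bytes + 16 * (len(data) - zero_bytes)

def data_cost_per_tx_gwei(batch: bytes, num_txs: int, gas_price_gwei: int) -> float:
    """L1 data cost attributable to each transaction in the batch."""
    return calldata_gas(batch) * gas_price_gwei / num_txs

# Hypothetical batch: 100 transactions of 100 bytes each, 20 of which are zero bytes.
tx_bytes = bytes(20) + bytes([1]) * 80
batch = tx_bytes * 100
print(calldata_gas(batch))                     # 136000
print(data_cost_per_tx_gwei(batch, 100, 20))   # 27200.0
print(data_cost_per_tx_gwei(batch, 100, 100))  # 136000.0 when L1 gas prices spike
```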

But back to rollup fees. Generally, fees on a rollup have three components: fees by the rollup for processing the transaction, batch/verification fees, and fees for posting the transaction data to L1 (as calldata). It is not unreasonable to assume that currently, 60-70% of rollup fees are attributable to fees incurred on L1.
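The Python sketch below shows how the three fee components interact in a per-transaction fee model. All input numbers are hypothetical and were chosen only to illustrate the mechanics. They are not measurements of any rollup. The key observation is that the L1 share of the fee grows with the L1 gas price. Both the amortized verification cost and the data cost scale with that price, while the rollup's own execution fee does not.

```python
from dataclasses import dataclass
from typing import Dict

@dataclass
class RollupCostModel:
    execution_fee_gwei: float  # fee the rollup charges for processing one tx
    verification_gas: int      # L1 gas to verify one batch (e.g. a validity proof)
    data_gas_per_tx: int       # L1 calldata gas attributable to one tx
    txs_per_batch: int

    def fee_breakdown(self, l1_gas_price_gwei: float) -> Dict[str, float]:
        """Split the per-tx fee into its three components and report the L1 share."""
        verification = self.verification_gas * l1_gas_price_gwei / self.txs_per_batch
        data = self.data_gas_per_tx * l1_gas_price_gwei
        total = self.execution_fee_gwei + verification + data
        return {
            "execution": self.execution_fee_gwei,
            "verification": verification,
            "data": data,
            "total": total,
            "l1_share": (verification + data) / total,
        }

model = RollupCostModel(execution_fee_gwei=10_000, verification_gas=500_000,
                        data_gas_per_tx=1_360, txs_per_batch=1_000)
print(round(model.fee_breakdown(10)["l1_share"], 2))  # 0.65
print(round(model.fee_breakdown(30)["l1_share"], 2))  # 0.85
```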

However, based on data from L2Fees.info, rollups frequently offer transaction fees that are already 5-10x lower than fees on L1 today (see table below). In times of congestion and high gas prices on L1 the difference can be even larger. Moreover, application-specific zk-rollups, which have better data compression, have even achieved ~50-100x lower fees than Ethereum L1. With the rollout of EIP-4844 (see “Scaling Ethereum on Rollups”), rollup fees are expected to be further reduced by up to 100x. Instead of posting calldata to L1, rollups will post transaction data into temporary data blobs, which have their own fee market separate from L1 execution and essentially reserved for rollups.

However, the long-term solution to this issue remains data sharding, which will add ~16 MB per block of dedicated data space to the chain that rollups could use to post data. Rollups themselves can reduce cost by improving data compression and reducing the amount of data posted to L1. Some projects have also pivoted to off-chain data availability solutions (e.g., the optimistic rollup Metis, but also validiums like Immutable).

Specifically in the context of zk-rollups, it gets even more interesting when we look at what are referred to as layer 3 rollups. WTF is a layer 3, you ask? Well, L3 relates to L2 just as L2 relates to L1. L3 can be realized using validity proofs as long as the Zero Knowledge proof system supports recursion (proofs can prove the validity of other proofs, see “ZK Proof System (plonky2)”) and the underlying L2 can host a verifier smart contract to verify those validity proofs (i.e., it must be a general-purpose computation layer like Polygon zkEVM).

When the L2 also uses validity proofs that are submitted to L1, this becomes an extremely scalable recursive Zero Knowledge proof structure in which the compression benefit of L2 proofs is multiplied by the compression benefit of L3 proofs, enabling hyper-scalability. Moreover, on/off-ramping flows between L1 and L2 are notoriously expensive, whereas moving between L2 and L3 only requires transactions on the cheap L2, allowing for much cheaper and simpler L2-L3 interoperability.
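The multiplicative effect can be illustrated with a deliberately simplified cost model: assume one L1 verification covers one L2 batch, and that with an L3 in place each L2 “transaction” is the verification of an L3 proof that itself covers many L3 transactions. The function name and all numbers are hypothetical, and the sketch ignores data availability costs, which real rollups also pay.

```python
def amortized_l1_gas_per_tx(l1_verify_gas: int, txs_per_l2_batch: int,
                            txs_per_l3_batch: int = 1) -> float:
    """Amortized L1 proof-verification gas per end-user transaction.

    Hypothetical model: without an L3 (txs_per_l3_batch=1), each entry in the
    L2 batch is one end-user tx. With an L3, each L2 entry is assumed to verify
    one L3 proof covering txs_per_l3_batch end-user txs, so the two
    compression factors multiply.
    """
    if l1_verify_gas < 0 or txs_per_l2_batch <= 0 or txs_per_l3_batch <= 0:
        raise ValueError("batch sizes must be positive and gas non-negative")
    return l1_verify_gas / (txs_per_l2_batch * txs_per_l3_batch)
```

With a hypothetical 500,000 gas verification and 1,000 transactions per L2 batch, each transaction pays 500 gas of verification; if each of those 1,000 entries is instead an L3 proof covering 100 transactions, the per-transaction share drops to 5 gas.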

In addition, independent L3 systems will interoperate with each other via the cheap L2, not the expensive L1, also allowing for very cheap L3-L3 interoperability. Finally, L3 structures provide developers with improved control over the technology stack, meaning that potential L3 solutions could be highly optimized application-specific systems that are customized for better application performance.

ii. Security

Aside from scalability, which some alternative layer 1 blockchains like Solana also offer, rollups primarily pride themselves on their security. The main distinction from alt L1 chains is that rollups do not have their own validator set. Instead, thanks to the mathematical verifiability of the proofs they post on L1, rollups effectively share security with the Ethereum base layer. This in turn means that rollup systems are essentially secured by Ethereum’s highly decentralized validator set, consisting of over 500,000 validators with a total of over USD 20bn in staked ETH.

However, despite being used in production, Zero Knowledge proof systems are still relatively new and complex, and they rely on the correct implementation of the polynomial constraints used to check the validity of the execution trace. Bugs in these constraints can expose users to risks (see “Risks”).

Additionally, many zk-rollup implementations currently rely on a centralized sequencer. In the normal flow, the sequencer is the only entity that can propose blocks, and a live and trustworthy sequencer is vital to the health of any rollup system. A lack of decentralization can hence pose a security risk, as the sequencer could go offline or censor transactions. However, zk-rollups generally have mechanisms in place to protect users and preserve their security guarantees. If users experience censorship from the sequencer, they can normally fall back to proposing their own blocks that include their transactions (as is the case with Polygon zkEVM).

Similarly, if the sequencer censors a user’s regular exit to L1, the user can submit the withdrawal request directly on L1. The L2 system is then obliged to service this request: once the forced operation has been submitted and processed, it follows the flow of a regular exit (a feature also supported by Polygon zkEVM).
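The escape hatches described above can be modeled as an L1-side queue of forced transactions with a deadline: the sequencer is expected to include queued entries in its next batches, and once an entry has waited longer than the deadline, anyone may sequence it. The class name, the timeout and the exact rule are hypothetical simplifications for illustration, not Polygon zkEVM’s actual contract logic.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class ForcedTx:
    payload: bytes
    submitted_at: int  # L1 timestamp (seconds) when the user forced the tx


class ForcedInclusionQueue:
    """Toy model of an L1 queue that bounds how long a sequencer can censor a tx."""

    def __init__(self, timeout_s: int):
        self.timeout_s = timeout_s  # hypothetical grace period for the sequencer
        self.queue = deque()        # ForcedTx entries, oldest first

    def force(self, payload: bytes, now: int) -> None:
        """User bypasses the sequencer by submitting the tx on L1."""
        self.queue.append(ForcedTx(payload, now))

    def sequencer_includes(self) -> list[bytes]:
        """The trusted sequencer drains the queue into its next batch."""
        included = [tx.payload for tx in self.queue]
        self.queue.clear()
        return included

    def anyone_can_sequence(self, now: int) -> list[bytes]:
        """After the timeout, any user may sequence the expired entries themselves."""
        expired = []
        while self.queue and now - self.queue[0].submitted_at >= self.timeout_s:
            expired.append(self.queue.popleft().payload)
        return expired
```

The key property is that censorship becomes temporary: an honest sequencer drains the queue, and a censoring one can only delay forced transactions until the timeout expires.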

In summary, it is reasonable to assume that a zk-rollup implementation like Polygon zkEVM provides stronger security guarantees than most alternative L1 chains, which rely on smaller validator sets with less value at stake.

iii. Privacy

Finally, the reliance on zero knowledge proofs can also reduce the amount of transaction detail that has to be shared, which is why zk-rollups are often described as privacy-enhancing. The core concept behind zk-rollups is the use of zero knowledge proofs, cryptographic techniques that allow one party (the prover) to prove to another party (the verifier) that a statement is true without revealing any information beyond its validity. In the context of zk-rollups, these proofs are used to validate the correctness of a batch of transactions, and the proof itself does not disclose the details of individual transactions.

The privacy benefits of zk-rollups stem from their ability to conceal the inputs of transactions while still proving their legitimacy. When users submit transactions to a zk-rollup, the rollup aggregates multiple transactions into a single batch and then generates a Zero Knowledge proof attesting to the validity of the entire batch. This proof is then submitted to the underlying blockchain (e.g., Ethereum) for final verification and storage.

The key advantage of this approach is that the proof itself never exposes the underlying transaction data: it is a succinct, cryptographically secure attestation that the batch is valid, and details such as sender and recipient addresses, amounts, and other metadata are not part of it. Note, however, that rollups which also post transaction data (or state differences) to L1 for data availability, as described above, still make that data public; stronger privacy requires designs that additionally encrypt or withhold it.
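A full zk-rollup proof is far too involved for a snippet, but the core zero-knowledge idea (convincing a verifier that you know a secret without revealing it) can be shown with a Schnorr proof of knowledge, made non-interactive with the Fiat-Shamir heuristic. This is a swapped-in textbook protocol, not the proof system Polygon zkEVM uses, and the tiny group parameters are for illustration only and provide no security.

```python
import hashlib
import secrets

# Toy parameters: P = 2*Q + 1 with P and Q prime; G = 4 generates the
# subgroup of order Q. Real deployments use groups of ~256-bit order.
P = 2039
Q = 1019
G = 4


def challenge(y: int, t: int) -> int:
    """Fiat-Shamir: derive the verifier's challenge by hashing the transcript."""
    digest = hashlib.sha256(f"{G}|{y}|{t}".encode()).digest()
    return int.from_bytes(digest, "big") % Q


def prove(x: int) -> tuple[int, tuple[int, int]]:
    """Prover knows secret x; returns public key y = G^x and a proof (t, s)."""
    y = pow(G, x, P)
    r = secrets.randbelow(Q)             # fresh random nonce; never reuse it
    t = pow(G, r, P)                     # commitment
    s = (r + challenge(y, t) * x) % Q    # response; r masks x
    return y, (t, s)


def verify(y: int, proof: tuple[int, int]) -> bool:
    """Check G^s == t * y^c (mod P); the verifier never learns x."""
    t, s = proof
    c = challenge(y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P
```

The verifier only ever sees `y`, `t` and `s`, yet a valid proof can only be produced by someone who knows `x`. zk-rollup proof systems apply the same principle to far larger statements, such as “this batch of transactions correctly transforms state root A into state root B”.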

b. What are the current issues & limitations?

But, perhaps unsurprisingly given how nascent Zero Knowledge rollup technology is, there are still issues and limitations that need to be addressed. This section introduces some of the key pain points of zk-rollup-based scaling.

i. Data Availability

Data availability is the primary scaling bottleneck for Ethereum-based rollup systems and a hot topic at the frontier of blockchain scaling. But what is the data availability problem and how is it addressed? Let’s quickly recap.

The data availability problem is the challenge of ensuring that all data within a newly proposed block is accessible to peers in a blockchain network. If some data is unavailable, the block could contain malicious transactions that are deliberately concealed by the block producer. Even if the transactions are non-malicious, concealing them could jeopardize the system's security. This issue is particularly pronounced for rollup systems, where it is crucial that sequencers and other participants can access transaction data, as they need to know the network's state and account balances. Data availability limitations also impose constraints on rollups in general.

Regardless of the sequencer's computational capabilities, the number of transactions it can process per second is ultimately restricted by the data throughput capacity of the underlying data availability solution. If the solution employed by a rollup is unable to keep pace with the volume of data that the rollup's sequencer intends to process, the sequencer (and the rollup) will be unable to handle more transactions, irrespective of its inherent technological capacity to do so.
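The bound described above is simple arithmetic: a rollup cannot sustain more transactions per second than its data availability layer accepts bytes per second, divided by the (compressed) size of one transaction. The function name and the example figures below are hypothetical placeholders, not measurements of Ethereum or of any specific rollup.

```python
def max_rollup_tps(da_bytes_per_block: int, block_time_s: float,
                   bytes_per_tx: float) -> float:
    """Upper bound on rollup throughput imposed by data availability alone.

    Sequencer and prover speed are deliberately ignored: however fast they are,
    they cannot publish more transaction data than the DA layer accepts.
    """
    if block_time_s <= 0 or bytes_per_tx <= 0:
        raise ValueError("block time and bytes per transaction must be positive")
    da_bytes_per_second = da_bytes_per_block / block_time_s
    return da_bytes_per_second / bytes_per_tx
```

For instance, a hypothetical 120,000 bytes of DA space per 12-second block and 100 bytes per transaction caps throughput at 100 TPS; halving the bytes per transaction through better compression doubles that cap.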

While the aforementioned EIP-4844 and data sharding (see “Scaling Ethereum on Rollups”) aim to address the data availability problem on Ethereum, some projects have pivoted to off-chain data availability solutions.

A “pure” validium, for example, uses zero knowledge proofs for transaction validity (like a zk-rollup) but stores transaction data off-chain with a centralized data provider instead of on-chain on L1. Validiums offer very low cost per transaction (because they post far less data to L1 than rollups do); however, their security guarantees are comparatively weak, as accessing the latest state in a validium requires the off-chain data to be available. Hence, there is a risk that the data provider misbehaves or goes offline. To address these security concerns, most current validium designs use a Data Availability Committee (DAC) rather than a single data provider (as in the pure validium). DAC-based solutions can be thought of as validiums with multiple nodes, where the members of the committee are trusted parties that keep copies of the data off-chain and make it available. An example of a DAC-based validium is Immutable.
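A DAC-based validium typically accepts a batch only once enough committee members attest that they hold its data. The sketch below models that k-of-n rule; real committees sign their attestations, whereas here signature verification is assumed to have happened already, and the member names, threshold and data layout are hypothetical.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Attestation:
    member: str     # committee member claiming to store the batch data
    data_hash: str  # hash of the data the member says it holds


def batch_is_available(batch_data: bytes, attestations: list[Attestation],
                       committee: set[str], threshold: int) -> bool:
    """k-of-n check: accept only if >= threshold distinct members attest to this exact data.

    Signature checks are assumed to have been done beforehand (not modeled here).
    """
    expected = hashlib.sha256(batch_data).hexdigest()
    signers = {a.member for a in attestations
               if a.member in committee and a.data_hash == expected}
    return len(signers) >= threshold
```

The residual trust assumption is visible in the code: if `threshold` members collude and attest to data they do not actually share, the check passes for unavailable data, which is why DAC security is weaker than posting data on L1.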

Celestiums, on the other hand, are a novel type of L2 network that uses Celestia’s specialized data availability layer but relies on Ethereum L1 for settlement and dispute resolution. In essence, Celestiums are a form of permissionless DAC scaling solution with additional economic guarantees on data availability, because the decentralized committee can be slashed for malicious behavior.

Finally, as explained earlier, “true” rollups use the underlying Ethereum base layer directly as their data availability layer, which comes with strong security guarantees but also high cost and certain limitations. While Polygon zkEVM does rely on Ethereum for data availability, it mitigates the data availability problem with a highly advanced proving system with cutting-edge data compression capabilities. Additionally, Polygon was also working on a specialized data availability layer under the name Polygon Avail, which would enable Celestium-style network implementations. However, the project recently decoupled from Polygon and is now building independently under the name Avail Project. Interestingly, Avail is building its chain on Polkadot’s Substrate framework.

ii. Sequencer Decentralization

Many rollups use a single node, called the sequencer, to generate blocks on L2. This has speed advantages, as blocks can be generated in seconds because they do not need to be handed to other nodes for verification. However, it also introduces centralization concerns, especially when there is no way to participate permissionlessly and the team effectively becomes a centralized operator of the network.

Luckily, many rollups are also taking steps to address these centralization issues. A common approach is a Proof of Stake based rotation mechanism in which sequencers are chosen from a permissionless pool. However, many argue that randomly assigning block production to single validators does not ensure a sufficient degree of decentralization. To tackle these issues, the Polygon Hermez team has proposed a novel consensus model called Proof-of-Efficiency (PoE), which will also be used in the Polygon zkEVM implementation. This mechanism is explained in detail in section “Network Architecture”.

But in many ways, the PoE mechanism is similar to Proposer-Builder Separation (PBS), which is part of the data sharding rollout in the Ethereum 2.0 roadmap and also aims to promote decentralization in the block production/validation process. Data sharding is set to combine PBS with what are referred to as crLists (censorship-resistance lists). PBS introduces a new role, the builder, which aggregates Ethereum L1 transactions as well as raw data from rollups into block candidates and submits them to proposers. Since block building is likely to be dominated by a few specialized builders, some censorship risks remain.

What if all builders choose to censor certain transactions? That’s where crLists come into play. With a crList, the proposer publishes a list of transactions it sees in the mempool, and builders must include them (unless the block is full). As a result, PBS allows third-party builders to compete to provide the best block of transactions to the next proposer, and allows stakers to capture as much of the economic value of their block space as possible, trustlessly and in protocol. The crList component, on the other hand, is a censorship resistance mechanism that prevents builders from abusing their magical powers and forces the inclusion of user transactions.

How does PBS work exactly, you ask? On a high level, the proposer first publishes a crList, a list of mempool transactions it considers eligible for inclusion. Builders then assemble full blocks, ordering transactions to maximize MEV (Maximal Extractable Value), and bid for their block to be chosen; each block must contain every crList transaction unless it is full. The proposer simply accepts the highest bid. In this way, although proposers have no say in how transactions are ordered, they can still ensure that transactions from the mempool enter the block in a censorship-resistant manner by forcing builders to include them. In conclusion, proposer-builder separation essentially builds a firewall and a market between proposers and builders.
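In the crList design discussed in Ethereum research, the proposer publishes the list and builders must honor it. The two rules can be sketched as a toy auction: the proposer picks the highest-bidding builder block, but only among blocks that contain every crList transaction or are already full. The data structures, gas limit and bids are hypothetical; in-protocol designs are still being researched.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class BuilderBlock:
    builder: str
    txs: tuple[str, ...]  # transaction ids, in the order the builder chose (MEV-optimized)
    gas_used: int
    bid_wei: int          # payment offered to the proposer


def satisfies_crlist(block: BuilderBlock, crlist: set[str], gas_limit: int) -> bool:
    """crList rule: include every listed tx, unless the block is already full."""
    return crlist.issubset(block.txs) or block.gas_used >= gas_limit


def choose_block(candidates: list[BuilderBlock], crlist: set[str],
                 gas_limit: int) -> Optional[BuilderBlock]:
    """Proposer accepts the highest bid among crList-compliant blocks."""
    valid = [b for b in candidates if satisfies_crlist(b, crlist, gas_limit)]
    return max(valid, key=lambda b: b.bid_wei, default=None)
```

A builder that censors a listed transaction is excluded no matter how much it bids, unless its block is genuinely full, so competition on ordering and MEV continues while censorship stops paying off.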

iii. Prover Decentralization

As we know from the section “Scaling Ethereum on Rollups”, zk-rollups require an off-chain prover to generate a succinct proof for a batch of transactions. However, generating these proofs for complex smart contract transactions can be costly.

To summarize, the typical transaction flow on a zk-rollup is as follows:

Users send transactions to a centralized sequencer (coordinator) on layer 2.

The sequencer executes transactions, packs multiple transactions into a rollup block, and pre-confirms each transaction to the user once it is included in a block.

A (often centralized) prover generates a succinct proof for the rollup block, which is uploaded to Layer 1 with the minimum required data for verification.

The layer 1 smart contract verifies the proof and updates the state (i.e., root hash).
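The four steps can be traced in a small simulation. It is a sketch under strong assumptions: state is a plain balance map, the “state root” is a hash of it instead of a Merkle root, and the “proof” is a placeholder that the L1 contract checks by re-executing the batch, which is exactly the work a real validity proof lets L1 avoid. All names are hypothetical.

```python
import hashlib
import json


def state_root(balances: dict[str, int]) -> str:
    """Stand-in for a Merkle root: hash of the canonically serialized state."""
    return hashlib.sha256(json.dumps(balances, sort_keys=True).encode()).hexdigest()


def apply_batch(balances: dict[str, int], batch: list[tuple[str, str, int]]) -> dict[str, int]:
    """Steps 1-2 (sequencer): execute (sender, recipient, amount) transfers; skip overdrafts."""
    new = dict(balances)
    for sender, recipient, amount in batch:
        if new.get(sender, 0) >= amount > 0:
            new[sender] -= amount
            new[recipient] = new.get(recipient, 0) + amount
    return new


class L1RollupContract:
    """Step 4: stores the latest state root and only advances it on a valid 'proof'."""

    def __init__(self, genesis: dict[str, int]):
        self.root = state_root(genesis)

    def submit(self, old_state: dict[str, int], batch: list[tuple[str, str, int]],
               new_root: str) -> bool:
        """Step 3 output arrives here. Placeholder check: re-execute the batch.

        A real verifier contract instead checks a succinct proof and never
        needs the full old state.
        """
        if state_root(old_state) != self.root:
            return False
        if state_root(apply_batch(old_state, batch)) != new_root:
            return False
        self.root = new_root
        return True
```

Swapping the body of `submit` for a succinct proof check, without changing its interface, is conceptually what separates a zk-rollup from this naive re-execution.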

The fact that many zk-rollups take a rather centralized design approach, with both the prover and the sequencer being centralized, leads to several issues that limit their functionality:

Limited computational power: Proof generation time is crucial for zk-rollups, particularly for large circuits (e.g., zkEVM). While customized hardware (ASICs) can significantly reduce proof generation time, the high cost of ASIC design and manufacturing makes it economically impractical for a single centralized entity.

Limited community participation: Community members or users cannot join the ecosystem other than by purchasing tokens and waiting for their release from the zk-rollup company. This makes it difficult to distribute shares fairly in a centralized setting.

Potential attacks from MEV and denial of transactions: The sequencer orders transactions centrally, enabling frontrunning for profit (e.g., by inserting its own transactions ahead of users’ transactions). This issue is known as MEV, originally “Miner Extractable Value” and now usually called “Maximal Extractable Value”. The sequencer can even refuse to include some transactions in the rollup block.

To address these issues, Scroll (a zkEVM rollup, see chapter “The zkEVM Landscape”) has proposed a layer 2 proof outsourcing mechanism to decentralize the prover. This involves engaging “miners” to generate proofs and rewarding them according to their completed proving work, which typically correlates positively with circuit size (an important parameter in zk proof systems). This encourages the miner community to contribute computational power to the platform. It is important to note, however, that this is not Proof of Work (PoW) but rather “volunteer computation” or “verifiable outsourced computation.” To differentiate them from PoW miners, these actors are referred to as rollers.

In Scroll’s proposed implementation, one must stake SCR tokens (or whatever Scroll’s native token ends up being called) in a smart contract to become a legitimate roller and generate proofs. Each roller is granted an initial reputation ratio proportional to its deposit, so a higher deposit yields a higher reputation ratio. The sequencer (still centralized in this proposed design) selects multiple rollers for each block based on their normalized reputation ratios and sends the block to the selected rollers, which must generate proofs within a time limit T.

The sequencer then verifies the proofs received from the rollers. In this scheme, the reputation ratio balances a roller’s stake and computational power: the stake determines the upper bound of a roller’s probability of being chosen, while the reputation ratio reflects the roller’s actual computational power. This mechanism promotes fairness, as not only the fastest roller but every roller has a chance to receive rewards. To maximize their profit, rollers are incentivized to generate proofs for different blocks in parallel. All parameters will be dynamically adjusted to the community’s computational power at the time.
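The selection step can be sketched as weighted sampling without replacement, where each roller’s chance of being picked is its normalized reputation ratio. The seeding scheme, the number of rollers per block and the data layout are assumptions made for this sketch, not details taken from Scroll’s proposal; in particular, Python’s `random` module is not suitable for adversarial settings, where a verifiable randomness source would be needed.

```python
import random


def normalize(reputation: dict[str, float]) -> dict[str, float]:
    """Turn raw reputation ratios into selection probabilities that sum to 1."""
    total = sum(reputation.values())
    if total <= 0:
        raise ValueError("at least one roller needs positive reputation")
    return {roller: r / total for roller, r in reputation.items()}


def select_rollers(reputation: dict[str, float], k: int, seed: int) -> list[str]:
    """Pick up to k distinct rollers, each draw weighted by normalized reputation."""
    # Deterministic for a given seed (e.g. derived from the block); NOT secure randomness.
    rng = random.Random(seed)
    pool = {r: w for r, w in normalize(reputation).items() if w > 0}
    chosen = []
    for _ in range(min(k, len(pool))):
        rollers = list(pool)
        pick = rng.choices(rollers, weights=[pool[r] for r in rollers], k=1)[0]
        chosen.append(pick)
        del pool[pick]  # without replacement: a roller is assigned a block at most once
    return chosen
```

Because every roller with positive reputation has a non-zero chance in each draw, slower rollers still win some blocks, which mirrors the fairness argument above.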

It should be noted that this proposed design still uses a centralized sequencer, with only proof generation being outsourced to rollers. This maintains the efficiency and speed of pre-confirmations for users, as there is no need for “consensus”. This once again shows that there are almost always trade-offs between decentralization and efficiency that need to be considered (see the blockchain trilemma in “History of Scaling & Polygon Scaling Initiatives”).

The Polygon zkEVM implementation will initially rely on a centralized prover (the ZKProver) with decentralization plans for the future. This will be covered in more detail in section “Network Architecture”.

c. What the f**k is a zkEVM?

Alright, we know what zk-rollups are, how they work, why they are so cool, and what limitations they are still facing. Now it is time to dive into what the Ethereum Virtual Machine is, why it is important, and how the EVM is finally coming to zk-rollups in the form of zkEVM implementations.

i. Introduction to the EVM

Firstly, let’s have a look at what the Ethereum Virtual Machine (EVM) is. Basically, the EVM is a piece of software that executes smart contracts and computes the state of the Ethereum network after a new block is added to the chain. The EVM sits on top of Ethereum’s hardware & node layer, and its main purpose is to compute the network’s state by running smart contracts. These contracts are written in human-readable programming languages (e.g., Solidity) and compiled into a machine-readable format called bytecode, which the EVM then executes.

The EVM hence powers smart contract execution and is one of the core features of Ethereum. Instead of a classic distributed ledger of transactions, the EVM transforms Ethereum into a distributed state machine. Ethereum’s state is a large data structure that holds not only all accounts and balances, but also an overall network or machine state, which can change from block to block according to a predefined set of rules. At any given block in the chain, Ethereum has one and only one canonical state, and the rules for valid state transitions are defined by the EVM.

To gain a clearer understanding, let's examine a simplified version of a smart contract transaction at a high level:

The contract bytecode is loaded from the contract account in Ethereum’s state and executed by every node in the peer-to-peer network. Since all nodes use the same transaction inputs and start from the same state, they all reach the same outcome.

EVM opcodes, which are embedded in the bytecode, then interact with various components of the EVM’s state (memory, storage, and stack). These opcodes carry out read-write operations, reading values from storage and writing new values back to it.

Lastly, EVM opcodes perform computations on the values acquired from state storage before returning the updated values. This update leads to the EVM transitioning to a new state.
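The three steps can be made concrete with a toy interpreter for a handful of real EVM opcodes (`STOP` 0x00, `ADD` 0x01, `MUL` 0x02, `SUB` 0x03, `SLOAD` 0x54, `SSTORE` 0x55, `PUSH1` 0x60). It omits gas, memory, calls and everything else, so it illustrates stack-based, deterministic execution rather than being a usable EVM; the function name `run` is chosen for this sketch.

```python
WORD = 2**256  # EVM words are 256-bit; arithmetic wraps modulo 2**256


def run(code: bytes, storage: dict[int, int]) -> dict[int, int]:
    """Execute `code` against a copy of `storage` and return the new storage."""
    storage = dict(storage)  # state in, new state out: no hidden side effects
    stack: list[int] = []
    pc = 0
    while pc < len(code):
        op = code[pc]
        if op == 0x00:                     # STOP
            break
        elif op in (0x01, 0x02, 0x03):     # ADD, MUL, SUB: a is the top of the stack
            a, b = stack.pop(), stack.pop()
            result = {0x01: a + b, 0x02: a * b, 0x03: a - b}[op]
            stack.append(result % WORD)
        elif op == 0x54:                   # SLOAD: read a storage slot (default 0)
            stack.append(storage.get(stack.pop(), 0))
        elif op == 0x55:                   # SSTORE: key on top, value below it
            key, value = stack.pop(), stack.pop()
            storage[key] = value
        elif op == 0x60:                   # PUSH1: push the next byte of code
            stack.append(code[pc + 1])
            pc += 1
        else:
            raise ValueError(f"unsupported opcode 0x{op:02x}")
        pc += 1
    return storage
```

Running the bytecode `600360020160005500` (push 3, push 2, add, push slot 0, store, stop) on empty storage leaves slot 0 holding 5 on every machine that runs it, which is the determinism the consensus argument above relies on.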

ii. Why EVM on L2 matters

But before we take a closer look at the zkEVM concept and the protocols building it out, let’s look at why it is important for the overall Ethereum ecosystem to have EVM-enabled layer 2 systems (and zk-rollups in particular).

As outlined in the section “History of Scaling & Polygon Scaling Initiatives”, Ethereum’s current design lacks true scalability. The rollup-centric ETH 2.0 roadmap introduced in chapter “Scaling Ethereum on Rollups” aims to address this by transforming the Ethereum L1 into a settlement and data availability layer for a multitude of powerful rollup-based execution layers that take computation off-chain, thereby enabling fast and cheap transactions at scale.

But as with any emerging ecosystem, it is important to win over developers and to enable both a smooth migration for projects built on Ethereum’s L1 and simple deployment for new projects. Since Solidity (the dominant EVM language) and the EVM as a whole (with all its development tools) have become the de facto programming standard for dApp development across the crypto space, it is not surprising that many alternative L1s (e.g., Fantom or Harmony) and sidechains (e.g., Polygon) have decided to rely on EVM implementations to attract developers.

While opening up the design space and the number of supported programming languages is an important development focus across the industry, for now EVM support can almost be considered vital for a thriving application ecosystem on any chain (with a few exceptions). Hence, enabling EVM support is certainly a major step forward for the adoption of zk-rollup ecosystems. However, innovation will not stop here: in the future we will surely see the emergence of other VM implementations (also on (zk-)rollups) and applications built via WASM or RISC-V using programming languages such as C++ or Rust rather than just Solidity.

On Ethereum-based (Zero Knowledge) rollups however, the importance of having an EVM environment also comes from an ideological perspective. The ethos of most zk-rollup projects is strongly aligned with the core values & vision of Ethereum as many explicitly define themselves as a piece in the overall Ethereum scaling puzzle. Consequently, many rollup projects strive for maximized Ethereum equivalence, which also means building an EVM implementation that is as close as possible to the original EVM.

But why exactly is the zkEVM so cool? Since it is easier to build EVM implementations on top of optimistic rollup technology than on zk-tech (due to the complex cryptography involved in computing & verifying proofs), EVM-compatible execution environments on optimistic rollups have been around for a while, with Arbitrum, Optimism and Metis being examples. The emergence of zkEVMs will now finally enable general-purpose zk-rollups as well; so far, we have mostly seen application-specific zk-rollups & validiums. The importance of the scalable, secure and private execution layers that zkEVM-enabled rollups can provide for entire ecosystems of composable DeFi protocols, and even for (application-specific) recursive rollup structures on top of zkEVMs (see “Scalability”), can’t be overstated. This is a major innovation for the entire Ethereum ecosystem and an important step in finally bringing true scale to Ethereum.

iii. Meet the zkEVM

Back in July, a trio of announcements from Scroll, zkSync & Polygon kicked off the race to mainnet, a.k.a. the zkEVM wars, as each company implied that it would be the “first” to bring the “best” zkEVM to market. Now, with only 3 days left before Polygon zkEVM’s mainnet launch at the time of publication, let’s dive into how the zkEVM works and what forms of zkEVM implementations exist among Polygon and its competitors.

A zkEVM is an EVM-compatible virtual machine that supports zero-knowledge proof computation. Integrating these two components is a complex process, as zk-proofs necessitate a specific format (algebraic circuit) for all computational statements, which can then be compiled into STARKs or SNARKs (more on this in section “ZK Proof System (plonky2)”). Developing zkEVMs allows general-purpose rollups to utilize EVM as their smart contract engine, ensuring compatibility with the prevalent interfaces in the Ethereum ecosystem and facilitating the migration of existing contracts and tooling applications onto the rollup.
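To see what “a specific format (algebraic circuit)” means, here is the classic textbook statement “I know x such that x³ + x + 5 = 35”, flattened into gates of the form `out = left op right` over a prime field and checked against a witness. This is a generic illustration of arithmetization; Polygon zkEVM expresses its constraints differently (as polynomial identities over its execution trace), and the Goldilocks prime is used here only as an example modulus.

```python
P = 2**64 - 2**32 + 1  # Goldilocks prime; any large prime works for this sketch

# Each gate: (output wire, op, left operand, right operand).
# Operands are wire names (str) or integer constants.
CIRCUIT = [
    ("sym1", "mul", "x", "x"),     # sym1 = x * x
    ("y",    "mul", "sym1", "x"),  # y    = x^3
    ("sym2", "add", "y", "x"),     # sym2 = x^3 + x
    ("out",  "add", "sym2", 5),    # out  = x^3 + x + 5
]


def value(wires: dict[str, int], operand) -> int:
    """Resolve an operand to a field element."""
    return operand % P if isinstance(operand, int) else wires[operand]


def gate(op: str, a: int, b: int) -> int:
    return (a * b) % P if op == "mul" else (a + b) % P


def make_witness(x: int) -> dict[str, int]:
    """Prover side: compute every intermediate wire value from the secret x."""
    wires = {"x": x % P}
    for out, op, left, right in CIRCUIT:
        wires[out] = gate(op, value(wires, left), value(wires, right))
    return wires


def check_witness(witness: dict[str, int], public_out: int) -> bool:
    """True iff every gate holds in the field and the output matches the public value."""
    for out, op, left, right in CIRCUIT:
        if witness.get(out) != gate(op, value(witness, left), value(witness, right)):
            return False
    return witness.get("out") == public_out % P
```

A SNARK or STARK prover then proves, succinctly, that a witness passing these checks exists. A zkEVM needs the same kind of constraints for every EVM opcode and every state access, which is a large part of why building one is so hard.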

A zkEVM can be broken down into three components: an execution environment, a proving circuit, and a verifier contract. Each component plays a role in the zkEVM’s program execution, proof generation, and proof verification. The execution environment is where smart contracts run within the zkEVM, functioning similarly to the EVM. The proving circuit is responsible for generating zero-knowledge proofs that validate the transactions executed in the execution environment. Zk-rollups submit these validity proofs to the verifier contract deployed on the L1 chain (Ethereum), which performs computations on the provided proof and confirms that the submitted outputs are correct given the inputs.

Constructing zkEVMs presents several challenges, such as the inclusion of specialized opcodes, a stack-based architecture, high storage overhead, and considerable proving costs. However, upon successful implementation, zkEVMs are expected to provide quicker finality, enhanced capital efficiency, and more secure scaling. As various zkEVM projects employ different methods for combining EVM execution with zero-knowledge proof computation, we will examine some of the ongoing zkEVM projects in a later section of this report.

Prior to that, we will explore classification approaches for zkEVMs and discuss the distinctions between zkEVM compatibility and equivalence. Equivalence is often referred to as the holy grail of EVM compatibility. Reaching EVM equivalence basically means reaching full bytecode-level compatibility and it is important to understand that EVM compatibility is not the same as EVM equivalence. Settling for mere compatibility means that devs are forced to modify, or even completely reimplement, lower-level code that Ethereum’s supporting infrastructure also relies on (more on this in the next section).

3. Overview of the (zk-)EVM Rollup Space

a. Levels of EVM Equivalence

There are multiple types of EVM compatibility. At a high level, they can be summarized in three main buckets:

Bytecode level

Language level

Consensus level

The breakdown proposed by Vitalik Buterin is more granular and divides the types of EVM compatibility and equivalence as follows:

Type 1 - Fully Ethereum-equivalent:

The first category of zkEVMs, as defined by Vitalik Buterin, is Fully Ethereum-equivalent zkEVMs. These strive to maintain complete compatibility with the Ethereum system, without altering any aspect such as hashes, state trees, transaction trees, or in-consensus logic. This perfect compatibility offers several benefits, including the potential to eventually make Ethereum L1 itself more scalable and the ability for rollups to reuse existing Ethereum infrastructure. However, the complexity of a fully Ethereum-equivalent zkEVM results in extremely long prover times.

Type 2 - Fully EVM-equivalent:

Type 2 zkEVMs aim to be EVM-equivalent but differ from Ethereum in some data structures, such as the block structure and the state tree. These zkEVMs enable most Ethereum-native applications to run on the zkEVM rollup, with only a few exceptions. Type 2 zkEVMs offer faster prover times than Type 1 zkEVMs but may be incompatible with applications that verify Merkle proofs over Ethereum’s native data structures, since they replace some of Ethereum’s complex, zk-unfriendly cryptography with more prover-friendly alternatives. A Type 2.5 zkEVM is fully EVM-equivalent except for gas costs: it raises the gas costs of operations that are particularly hard to prove, such as precompiles, the keccak opcode, and specific patterns of contract calls, memory or storage access, or reverts.

Type 3 - Almost EVM-equivalent:

Type 3 zkEVMs sacrifice equivalence to enhance prover times and simplify EVM development. Consequently, these zkEVMs may eliminate a few features that are challenging to implement in a zkEVM. While this approach generally improves prover times, it introduces new complexity as some applications may need to be rewritten.

Type 4 - High-level-language equivalent:

The final category of zkEVMs takes smart contract source code written in a high-level language (e.g., Solidity) and compiles it into a language designed to be ZK-friendly, allowing for significantly faster prover times. However, this approach comes with substantial incompatibilities.

But while prioritizing EVM compatibility has its advantages (see “Why EVM on L2 matters” and “Meet the zkEVM”), highly EVM-compatible zkVMs are often slower and more resource-intensive than zkVMs that go for a lower degree of EVM compatibility, in order to optimize for efficiency and scalability. This tradeoff between performance and EVM equivalence is why zkSync and more prominently StarkNet have chosen a less compatible approach to building their Virtual Machines (see “The zkEVM Landscape”).

b. The zkEVM Landscape

But let’s have a look at where the leading zkEVM projects fit into these classification systems. zkSync 2.0, for example, falls into the language-level bucket. Devs can write smart contracts in Solidity, but behind the scenes zkSync compiles that code (via Solidity’s Yul intermediate representation) into its own zk-optimized bytecode. While Matter Labs (the company behind zkSync) claims that this design gives the rollup scalability advantages, primarily in the proving process, by most definitions zkSync’s EVM implementation would be described as EVM-compatible rather than EVM-equivalent. Not being equivalent means that zkSync may not be 1:1 compatible with every single Ethereum tool out there, which might result in additional overhead for devs. However, Matter Labs insists that this shouldn’t be an issue in the long term. This is similar to the approach StarkWare takes with StarkNet & the programming language Cairo.

StarkNet is based on the Cairo programming language, which is optimized for zk-proofs. To enable smart contracts & composability, StarkNet takes a language-level compatibility approach and transpiles EVM-friendly languages (e.g., Solidity) down to a STARK-friendly VM (in Cairo).

Scroll & Polygon zkEVM, on the other hand, both take the more ambitious bytecode-level approach to their zkEVMs. These approaches rip out the transpiler step completely, meaning they don’t convert Solidity code into a separate language before it gets compiled & interpreted. This generally results in better compatibility with the EVM. But even here, there are distinctions that probably make Scroll more of a “true” zkEVM than Polygon’s implementation. When Polygon announced back in July that it was bringing the first EVM-equivalent zkEVM to market, many quickly pointed out that, based on its specifications, the implementation was likely better described as EVM-compatible than EVM-equivalent.

As outlined in an article by Messari, part of the “true EVM” debate centers on whether EVM bytecode is executed directly or first interpreted and then executed. In other words, if a solution does not mirror the official EVM specs, it cannot be considered a “true zkEVM”. By this definition, Scroll might be considered a “true zkEVM”, unlike the others introduced here, as it aims to execute EVM bytecode directly. According to Messari, Polygon, on the other hand, uses a new set of assembly codes to express each opcode (the human-readable translation of bytecode) as an intermediate step, which could theoretically allow the code to behave differently than on the EVM. Hence, overall, Polygon might be a little further from EVM equivalence than its main bytecode-level competitor Scroll, but it still comes close (see figure below).

Taiko, meanwhile, aims to achieve Type 1 status (see “Levels of EVM Equivalence”), striving for complete Ethereum equivalence without introducing modifications to ease zero-knowledge proof generation. Taiko intends to maintain compatibility with Ethereum at the opcode level, preserving hash functions, precompiled contracts, transaction and state trees, and other in-consensus logic. Although its whitepaper mentions that certain Ethereum Improvement Proposals (EIPs) are temporarily disabled, these limitations are expected to be addressed over time.

Additionally, Taiko's compatibility extends further through its use of the Go-Ethereum client, a well-established Ethereum client that offers familiarity and ease of use for participants. This compatibility allows end users to interact with Uniswap on Taiko in the same manner as on the Ethereum mainnet, thus enhancing consistency, accessibility, and user satisfaction.

However, pursuing perfect compatibility presents challenges, particularly for aspiring type 1 zkEVMs like Taiko. The primary obstacle is slow proof generation: Ethereum was not originally designed with zero-knowledge proofs in mind, so the protocol contains numerous components (such as Keccak hashing and the Merkle Patricia trie) that require extensive computation to prove.

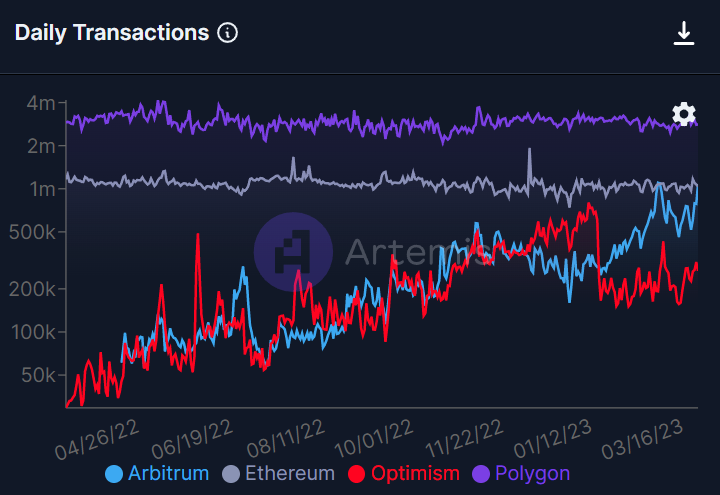

c. Optimistic EVM Rollups

Outside the “zkEVM wars”, zkEVM rollups will also face tough competition from optimistic rollups, which have already had time to establish themselves in the market. There are a number of EVM-enabled optimistic rollup implementations, including Arbitrum, Optimism, Metis and Boba. This section briefly introduces these protocols and explores to what extent they might compete with zk-rollups like Polygon zkEVM.

Optimism: An optimistic rollup protocol. Transactions are sent to the layer 2 and received by sequencers, who are responsible for executing them accurately. Sequencers are rewarded for executing transactions correctly and punished if they act maliciously. Anyone who suspects that a sequencer has acted fraudulently can alert the adjudicator contract on Ethereum mainnet.

This contract can verify the validity of the sequencer's results using the Optimistic Virtual Machine (OVM), an EVM-compatible execution environment built for L2 systems. If a sequencer's results turn out to be invalid, a fraud proof is executed and the sequencer's funds are slashed. Part of the slashed funds is awarded to the whistleblower who challenged the sequencer's results during a window known as the “challenge period”. This period typically lasts around one week, which results in a one-week delay when moving assets from Optimism back to Ethereum layer 1.
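The challenge-period mechanics can be modelled in a few lines. This is a hypothetical sketch, not Optimism's actual contracts: `fraud_proven` stands in for re-executing the disputed transaction on L1, and the whistleblower's 50% share of the slashed bond is an assumed value.

```python
from dataclasses import dataclass

# Roughly one week, in seconds, as described above.
CHALLENGE_PERIOD = 7 * 24 * 3600


@dataclass
class StateCommitment:
    root: str
    sequencer: str
    bond: int
    submitted_at: int
    slashed: bool = False


class ToyOptimisticRollup:
    """Hypothetical challenge-window model; not Optimism's real contracts."""

    def __init__(self) -> None:
        self.commitments: list[StateCommitment] = []
        self.balances: dict[str, int] = {}

    def submit(self, root: str, sequencer: str, bond: int, now: int) -> int:
        """Sequencer posts a state root together with a bond; returns its index."""
        self.commitments.append(StateCommitment(root, sequencer, bond, now))
        return len(self.commitments) - 1

    def challenge(self, idx: int, challenger: str, fraud_proven: bool, now: int) -> bool:
        """Slash the bond if fraud is proven before the window closes."""
        c = self.commitments[idx]
        if c.slashed or now >= c.submitted_at + CHALLENGE_PERIOD or not fraud_proven:
            return False
        c.slashed = True
        # Assumption: the whistleblower receives half of the slashed bond.
        self.balances[challenger] = self.balances.get(challenger, 0) + c.bond // 2
        return True

    def is_final(self, idx: int, now: int) -> bool:
        """Final (withdrawals can complete) once the window passes unchallenged."""
        c = self.commitments[idx]
        return not c.slashed and now >= c.submitted_at + CHALLENGE_PERIOD


rollup = ToyOptimisticRollup()
i = rollup.submit("0xabc", "seq-1", bond=1000, now=0)
print(rollup.is_final(i, now=3600))              # False: still inside the window
print(rollup.is_final(i, now=CHALLENGE_PERIOD))  # True: the week has passed
```

The one-week withdrawal delay falls directly out of `is_final`: nothing about a commitment can be trusted until the window in which fraud could still be proven has closed.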

Arbitrum: A suite of Ethereum scaling solutions that enables high-throughput, low-cost smart contracts on L2. While Arbitrum also offers an alternative scaling solution in the form of AnyTrust, this section focuses on the Arbitrum rollup, which is currently live on mainnet as Arbitrum One. Similar to the OVM, the Arbitrum Virtual Machine (AVM) is EVM-compatible, but it is optimized to allow fast progress in the optimistic case while retaining the ability to resolve disputes efficiently.

Thanks to EVM support, porting contracts from Ethereum to Arbitrum is fast & easy. Additionally, all smart contract languages that work with Ethereum (e.g. Solidity or Vyper) work with Arbitrum. Similarly, all standard Ethereum developer tools (e.g. Truffle, MetaMask, The Graph, ethers.js) are also natively integrated with Arbitrum.

Metis DAO: Metis is a hyper-scalable optimistic rollup L2 built on top of Ethereum. It offers a mostly EVM-equivalent virtual machine environment for running Solidity smart contracts. By introducing a peer network and rotating the sequencer role among a network of nodes, Metis aims to improve the security and decentralization of optimistic rollups. Its multiple layers of checks and balances also allow Metis to significantly shorten withdrawal times. Moreover, Metis uses MemoLabs as a storage and data availability layer, which significantly reduces transaction costs. Finally, Metis offers a framework for Decentralized Autonomous Companies (DACs), which extends the DAO concept to company-like organizations.

Boba Network: An optimistic rollup scaling solution built on the work done by Optimism, whose continued development focuses on features such as swap-based on-ramps, fast exits to L1 and cross-chain bridging.

There are also other optimistic rollup projects, such as Specular and Base (Coinbase's L2 built on the OP Stack), that strive to achieve the highest possible degree of EVM equivalence. This focus is prevalent across the optimistic rollup space, especially among the leaders Optimism and Arbitrum. Although optimistic rollups take a different technological approach from zk-rollups, the rationale is the same: building EVM-equivalent execution layers that share security with the Ethereum base layer. zkEVM rollups will therefore certainly compete with their optimistic counterparts for users, TVL and developers. While optimistic rollups have a first-mover advantage, zk-rollups introduce certain technological features that optimistic rollups cannot provide (see “What makes zk-rollups so exciting?”).

II. Presentation of Polygon zkEVM

4. Technology Deep-Dive into Polygon zkEVM

a. Network Architecture

As mentioned earlier, Polygon zkEVM relies on the novel Proof-of-Efficiency (PoE) consensus mechanism, which is designed to address the challenges of sequencer decentralization. PoE uses a two-step model involving two types of participants: Sequencers and Aggregators.

Sequencers are responsible for collecting L2 transactions from users and forming new L2 batches. Operating in a permissionless manner, Sequencers create batch proposals and submit them as Layer 1 (L1) transactions. To propose a new batch, a Sequencer must pay the L1 gas fees as well as an additional fee in $MATIC tokens. This additional fee gives Sequencers an incentive to propose valid batches containing legitimate transactions. The batch fee varies with network load and is set by a parameter that the protocol smart contract updates automatically.
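The article does not specify how the batch fee reacts to load, so the following is a purely hypothetical update rule, shown only to illustrate what a self-adjusting fee parameter might look like: the fee rises by a fixed percentage while the backlog of unverified batches exceeds a target and falls otherwise, within a floor and a ceiling. All constants and names are assumptions, and amounts are in the smallest MATIC unit (18 decimals). Integer arithmetic is used, as an on-chain contract would.

```python
# Hypothetical parameters; not taken from Polygon's contracts.
MULTIPLIER_PER_MILLE = 1100  # +/-10% per adjustment
MIN_FEE = 10**15             # floor: 0.001 MATIC
MAX_FEE = 10**22             # ceiling: 10,000 MATIC


def next_batch_fee(fee: int, pending_batches: int, target_pending: int) -> int:
    """Raise the fee when the unverified backlog exceeds the target, lower it otherwise."""
    if pending_batches > target_pending:
        fee = fee * MULTIPLIER_PER_MILLE // 1000
    else:
        fee = fee * 1000 // MULTIPLIER_PER_MILLE
    # Clamp so the fee can never collapse to zero or grow without bound.
    return max(MIN_FEE, min(MAX_FEE, fee))


print(next_batch_fee(10**18, pending_batches=50, target_pending=10))  # 1100000000000000000
print(next_batch_fee(10**18, pending_batches=5, target_pending=10))   # 909090909090909090
```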

Permissionless Aggregators are the second key actor in the PoE mechanism. Their primary responsibility is to generate validity proofs (via the ZKProver) for the updated L2 state based on the batches proposed by Sequencers, and to submit them. As batches are proposed and recorded as L1 transactions, Aggregators monitor them and decide strategically when to start proof generation. Their objective is to be the first to produce a valid proof that updates the L2 state by incorporating one or more proposed batches. The PoE smart contract accepts only the first submitted validity proof that updates the L2 state, which makes the process highly competitive among Aggregators. Those that fail to submit the winning proof bear the cost of proof generation, but most of their gas fees can be recovered.
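The “first valid proof wins” rule can be sketched as a small state machine. The class and its parameters are hypothetical, not Polygon's contract: `proof_ok` stands in for the on-chain validity-proof verification, and the reward amount is passed in rather than modelled.

```python
from dataclasses import dataclass, field


@dataclass
class ToyPoEContract:
    """Hypothetical 'first valid proof wins' model; not Polygon's contract."""
    proposed_batch: int = 0   # highest batch number proposed by Sequencers
    verified_batch: int = 0   # highest batch number covered by an accepted proof
    rewards: dict[str, int] = field(default_factory=dict)

    def propose_batch(self) -> int:
        self.proposed_batch += 1
        return self.proposed_batch

    def submit_proof(self, aggregator: str, up_to_batch: int,
                     proof_ok: bool, reward: int) -> bool:
        # Reject invalid proofs, proofs for batches nobody proposed, and
        # proofs that no longer advance the state because another
        # Aggregator was faster.
        if (not proof_ok or up_to_batch > self.proposed_batch
                or up_to_batch <= self.verified_batch):
            return False
        self.verified_batch = up_to_batch
        self.rewards[aggregator] = self.rewards.get(aggregator, 0) + reward
        return True


poe = ToyPoEContract()
for _ in range(3):
    poe.propose_batch()
print(poe.submit_proof("agg-A", 3, proof_ok=True, reward=30))  # True: first valid proof
print(poe.submit_proof("agg-B", 3, proof_ok=True, reward=30))  # False: state already advanced
```

A single accepted proof can cover several batches at once (here batches 1 to 3), and a late proof for the same range is simply rejected; the loser's off-chain proving work is what is lost.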

By allowing multiple Sequencers to propose batches and multiple Aggregators to take part in proof generation in a permissionless way, the PoE mechanism also promotes decentralization and prevents the network from being controlled by a single entity. It also makes the network more resilient against malicious attacks, since any Aggregator can step in to create and submit validity proofs when needed.

In Polygon zkEVM, transaction validation and verification are handled by a central zero-knowledge proof component called the ZKProver. This component implements and enforces the conditions a transaction must meet to be deemed valid. The ZKProver performs complex mathematical computations involving polynomials and assembly language, and the resulting proofs are then verified by a smart contract. These conditions can be regarded as constraints that a transaction must satisfy in order to modify the state tree or the exit tree.

Given its complexity, the ZKProver is the most intricate module of the zkEVM. To implement it, two new programming languages were developed: the Zero-Knowledge Assembly language (zkASM) and the Polynomial Identity Language (PIL), neither of which is covered in depth in this report.

At a high level, the ZKProver is comprised of four primary components:

The Executor or Main State Machine Executor

The STARK Recursion Component

The CIRCOM Library

The zk-SNARK Prover

In essence, the ZKProver uses these four components to generate verifiable proofs. The polynomial constraints, or polynomial identities, encode the conditions that each proposed batch must fulfill: every valid batch must satisfy them. A particularly interesting feature of this design is the combined use of SNARKs and STARKs (more on this in section “ZK Proof System (plonky2)”).
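To give a feel for what “polynomial identities as constraints” means, here is a toy state machine with two registers whose execution trace must satisfy the transition identities `a_next - b = 0` and `b_next - (a + b) = 0` on every row. Arithmetic is done modulo the Goldilocks prime 2^64 - 2^32 + 1 used by plonky2. This is a simplified illustration, not Polygon's actual PIL constraint set, and the function names are hypothetical. A real STARK also does not check rows one by one: it interpolates the trace columns into polynomials and checks the identities at randomly sampled points.

```python
# Goldilocks prime, the field used by plonky2.
P = 2**64 - 2**32 + 1


def fibonacci_trace(a0: int, b0: int, rows: int) -> list[tuple[int, int]]:
    """Execution trace of a toy state machine with registers (a, b)."""
    trace = [(a0 % P, b0 % P)]
    for _ in range(rows - 1):
        a, b = trace[-1]
        trace.append((b, (a + b) % P))
    return trace


def satisfies_constraints(trace: list[tuple[int, int]]) -> bool:
    """Check a_next - b = 0 and b_next - (a + b) = 0 between every pair of rows."""
    for (a, b), (a_next, b_next) in zip(trace, trace[1:]):
        if (a_next - b) % P != 0 or (b_next - a - b) % P != 0:
            return False
    return True


trace = fibonacci_trace(0, 1, 8)
print(trace[-1], satisfies_constraints(trace))  # (13, 21) True

tampered = list(trace)
tampered[4] = (3, 6)  # a single wrong register value breaks an identity
print(satisfies_constraints(tampered))          # False
```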

Owing to their speed and lack of a trusted setup, zk-STARK proofs are used in the verification process described above. However, they are considerably larger than zk-SNARK proofs. Because of this size issue, and because zk-SNARKs are succinct (see “ZK Proof System (plonky2)”), the ZKProver uses a zk-SNARK to attest to the correctness of the zk-STARK proof. As a result, it is the zk-SNARK that is published on L1 as the validity proof for state changes.
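A back-of-the-envelope calculation shows why proof size matters on L1. Under EIP-2028, calldata costs 16 gas per non-zero byte and 4 gas per zero byte. The sketch compares a 256-byte SNARK proof (the size of an uncompressed Groth16 proof on the BN254 curve, used here only as a representative SNARK, not necessarily the scheme Polygon uses) with a hypothetical 100 KB STARK proof, assuming every byte is non-zero.

```python
NONZERO_BYTE_GAS = 16  # EIP-2028 calldata cost per non-zero byte
ZERO_BYTE_GAS = 4      # calldata cost per zero byte


def calldata_gas(payload: bytes) -> int:
    """Gas charged for `payload` as calldata (excluding the 21,000 base transaction cost)."""
    return sum(ZERO_BYTE_GAS if byte == 0 else NONZERO_BYTE_GAS for byte in payload)


# Worst case: every byte non-zero. Both sizes are illustrative assumptions.
snark_proof = b"\x01" * 256      # e.g. an uncompressed Groth16 proof on BN254
stark_proof = b"\x01" * 100_000  # hypothetical ~100 KB STARK proof

print(calldata_gas(snark_proof))  # 4096
print(calldata_gas(stark_proof))  # 1600000
```

This covers only the cost of posting the proof; the gas spent by the verifier contract itself comes on top.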

With regard to EVM compatibility, Polygon zkEVM takes a rather ambitious bytecode-level approach in its quest for EVM equivalence. By eliminating the transpiler step, it preserves compatibility with the Ethereum Virtual Machine (EVM), since Solidity code is not converted into a separate language before compilation and interpretation.

Polygon's zkEVM, however, while highly EVM-compatible, may not be considered fully EVM-equivalent because it uses a new set of assembly codes to express each EVM opcode. This intermediate layer could theoretically cause code to behave differently than it would on the EVM. Nevertheless, it is one of the most EVM-equivalent zkEVMs out there, its closest rival being Scroll (see “The zkEVM Landscape”).

Another important aspect of Polygon zkEVM's network architecture is the role that layer 3 (L3) systems will play. Recursive L3 rollup structures are a crucial part of how Polygon aims to increase the zkEVM's throughput. Polygon plans to provide extensive infrastructure for them, and multiple projects are already working on L3 implementations.

b. ZK Proof System (plonky2)

This section will introduce the cutting-edge plonky2 proof system that Polygon zkEVM uses as a basis for its validity proofs. However, let’s first have a closer look at what SNARKs and STARKs are and how they differ from each other.

First of all, what are zero-knowledge proofs (ZKPs) in general? A ZKP is a cryptographic technique that allows one party (the prover) to prove to another (the verifier) that a statement is true, without revealing any information beyond the validity of the statement.

In crypto, we especially care about non-interactive zero-knowledge proofs (NIZKPs), a variant of ZKPs that requires no back-and-forth interaction between the prover and the verifier. Many NIZKP constructions rely on a common reference string (CRS), which is generated in a publicly verifiable trusted setup ceremony. The CRS serves as the basis for the NIZKPs and allows statements to be verified without any interaction. However, this reliance on a CRS raises security concerns, because the integrity of the whole system depends on the setup ceremony having been carried out honestly.

The trusted setup ceremony generates a CRS consisting of the cryptographic parameters used to create and verify the system's NIZKPs. One challenge in designing NIZKPs is finding the right balance between security, efficiency and CRS size; ideally, the CRS should be small and secure. Techniques such as universal hash functions and the Fiat-Shamir transformation, which replaces the verifier's random challenges with the output of a hash function, have been proposed to achieve this balance.
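The Fiat-Shamir idea can be illustrated with a classic example swapped in for clarity: a Schnorr proof of knowledge of a discrete logarithm, made non-interactive by hashing the transcript to obtain the challenge. This is not the proof system Polygon uses, the function names are hypothetical, and the group parameters (p = 2039, q = 1019, g = 4) are toy values that offer no security. Note that this construction needs no CRS, only a hash function treated as a random oracle.

```python
import hashlib
import secrets

# Toy parameters (far too small for real use): p = 2q + 1 is a safe prime and
# g = 4, a quadratic residue, generates the subgroup of prime order q.
P = 2039
Q = 1019
G = 4


def challenge(*values: int) -> int:
    """Fiat-Shamir: derive the verifier's challenge by hashing the transcript."""
    data = b"|".join(str(v).encode() for v in values)
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(x: int) -> tuple[int, int, int]:
    """Prove knowledge of x with y = G^x mod P, without revealing x."""
    y = pow(G, x, P)
    r = secrets.randbelow(Q)  # fresh random nonce
    t = pow(G, r, P)          # commitment
    c = challenge(G, y, t)    # the hash replaces an interactive verifier
    s = (r + c * x) % Q       # response
    return y, t, s


def verify(y: int, t: int, s: int) -> bool:
    """Accept iff G^s == t * y^c (mod P), with c recomputed from the transcript."""
    c = challenge(G, y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P


y, t, s = prove(x=123)
print(verify(y, t, s))  # True
```

The verifier learns y, t and s but not x; because the challenge is recomputed from the hash, the prover cannot pick it after seeing the commitment, which is what interaction would otherwise guarantee.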

zk-SNARKs are the best-known form of zero-knowledge proof, and Zcash, which launched in 2016, was the first protocol to bring them into widespread production use. But what exactly are SNARKs? SNARKs (Succinct Non-interactive ARguments of Knowledge) are proofs that are short and quick to verify; zk-SNARKs add the zero-knowledge property, making them a method of verifying the validity of a statement without revealing any information about it beyond its validity. The zk-SNARK proofs in Zcash, for example, allow the network to verify transactions without compromising user privacy: even the nodes enforcing consensus rules do not need to know the underlying data of each transaction, which improves both user anonymity and transaction confidentiality. zk-rollups have similar privacy benefits, outlined in the section “Privacy”.

But Zcash was not only a pioneer: its Halo proof system was also the first zero-knowledge proof system ever discovered that supports both of the following features in a single system:

No trusted setup

Recursion

As mentioned above, one key feature of Halo is that it needs no trusted setup. The security of the system therefore does not depend on the integrity of a setup ceremony, so it cannot be exploited if such a ceremony is compromised. The absence of a trusted setup also gives the protocol greater agility and enables the development of novel zero-knowledge protocols, removing a major source of complexity and risk that was inherent to earlier non-interactive zero-knowledge proof systems.

The second key property that makes Halo stand out is recursion, which makes the protocol scalable and capable of proving arbitrarily complex facts. This makes it a “general-purpose” protocol that can be used for a wide range of zero-knowledge applications (zkApps). An important consequence is that proofs can verify other proofs, allowing recursive rollup structures (e.g. application-specific L3s) on top of the generalized L2 (see chapter “Scalability”). As outlined in the section “Network Architecture”, L3s also play an important role in realizing Polygon zkEVM's full scaling potential.
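The effect of recursion on a rollup can be sketched structurally: batch proofs are merged pairwise until a single proof remains, so L1 only has to verify one proof and the number of merge layers grows logarithmically with the number of batches. In this hypothetical sketch a SHA-256 hash stands in for each proof; it has none of the soundness or zero-knowledge properties of a real recursive proof, which would verify its child proofs inside a circuit.

```python
import hashlib


def mock_proof(statement: str) -> str:
    """Placeholder for a proof: a hash binding to the statement (NOT sound, NOT zero-knowledge)."""
    return hashlib.sha256(statement.encode()).hexdigest()


def aggregate(proofs: list[str]) -> tuple[str, int]:
    """Merge proofs pairwise until one remains; return (root proof, number of layers).

    Each merge stands in for a recursive proof attesting that both child
    proofs verify.
    """
    if not proofs:
        raise ValueError("need at least one proof")
    layer, depth = list(proofs), 0
    while len(layer) > 1:
        nxt = []
        for i in range(0, len(layer), 2):
            if i + 1 < len(layer):
                nxt.append(mock_proof("verified:" + layer[i] + layer[i + 1]))
            else:
                nxt.append(layer[i])  # an odd proof is carried up unchanged
        layer, depth = nxt, depth + 1
    return layer[0], depth


batch_proofs = [mock_proof(f"batch {n}") for n in range(1, 9)]
root, depth = aggregate(batch_proofs)
print(depth)  # 3: eight batch proofs collapse into one root in three layers
```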

More recently, another proof technology has gained a lot of attention: STARKs (Scalable Transparent ARguments of Knowledge). But what makes STARK proofs interesting, you ask? Well, STARKs offer a number of benefits, the main one being that they eliminate the need for a trusted setup (as do more advanced SNARK proof systems such as Halo). However, both SNARKs and STARKs come with some limitations and trade-offs. While many SNARKs require a trusted setup, it is primarily the much larger proof size, and the resulting cost of verifying proofs on-chain, that limits the use of STARKs.
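“Transparent” means STARKs need no trusted setup; they rely only on public randomness and hash functions. One of their core hash-based building blocks is a Merkle commitment: the prover commits to a list of evaluations with a single root hash and later opens individual positions that the verifier samples. The sketch below shows only this building block (SHA-256 and the function names are assumptions); a full STARK adds a low-degree test such as FRI on top.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_layers(leaves: list[bytes]) -> list[list[bytes]]:
    """Build every layer of a Merkle tree; the leaf count must be a power of two."""
    if not leaves or len(leaves) & (len(leaves) - 1):
        raise ValueError("leaf count must be a non-zero power of two")
    layers = [[h(leaf) for leaf in leaves]]
    while len(layers[-1]) > 1:
        prev = layers[-1]
        layers.append([h(prev[i] + prev[i + 1]) for i in range(0, len(prev), 2)])
    return layers


def open_leaf(layers: list[list[bytes]], index: int) -> list[bytes]:
    """Authentication path: the sibling hash at each level, bottom to top."""
    path = []
    for layer in layers[:-1]:
        path.append(layer[index ^ 1])
        index //= 2
    return path


def verify_opening(root: bytes, leaf: bytes, index: int, path: list[bytes]) -> bool:
    """Recompute the root from the leaf and its path; the index decides left/right order."""
    node = h(leaf)
    for sibling in path:
        node = h(node + sibling) if index % 2 == 0 else h(sibling + node)
        index //= 2
    return node == root


evaluations = [str(v).encode() for v in (3, 1, 4, 1, 5, 9, 2, 6)]
layers = merkle_layers(evaluations)
root = layers[-1][0]
path = open_leaf(layers, 5)
print(verify_opening(root, b"9", 5, path))  # True
print(verify_opening(root, b"8", 5, path))  # False
```

Each opened position needs its own authentication path, which is one reason STARK proofs are comparatively large.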